As a precursor to this guide, I thought I’d give you a bit of backstory. You can skip the prologue if you aren’t interested in the backstory and just want the juicy details. If you’re a company looking to get THE best ECommerce SEO Agency in the world ranking your site or an agency looking to white label us, then you can get in touch with my team here.

This is probably the most in-depth guide I have ever created, and I am giving away a lot of the tactics we use to rank large sites. If you learn anything from this guide (which I’m pretty sure you will), sharing it would be awesome.


The Prologue

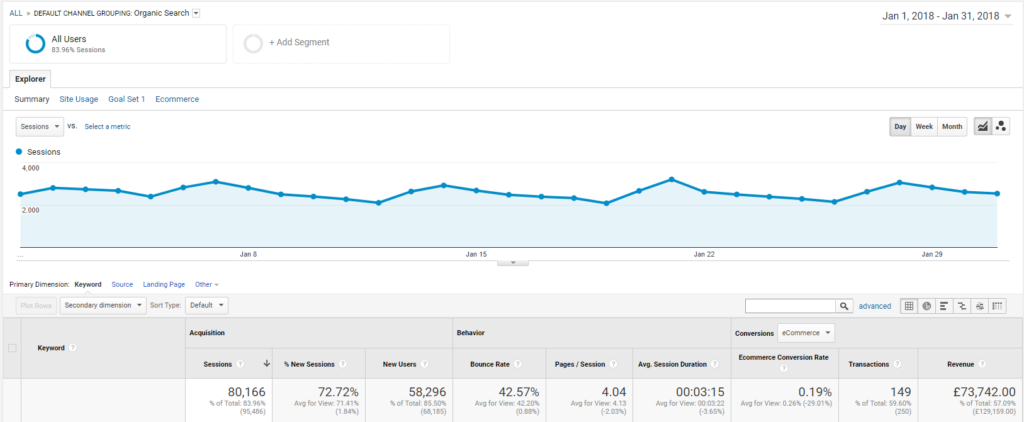

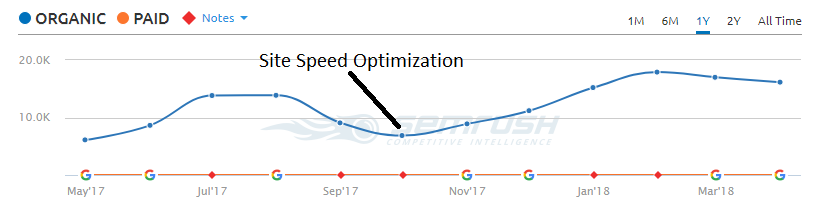

2 years ago, I got a call from my mum’s accountancy agency saying they had a client looking for a new SEO agency. They’d been hit with an algorithmic penalty while with their old agency, who’d spent months trying to fix it to no avail. We came in, fixed the penalty and took the site to more traffic than it has ever had before:

You can see the algo penalty slowly churning traffic down from 50k/Mo to under 25k, a HUGE amount of business lost for the company. We came in, recovered traffic back to the 50k mark (algo penalties are notoriously harder to remove than manual ones, especially in the pre-rolling days) and have since managed to get it on track to that 100k mark, peaking in January this year at over 78k, which meant an organic revenue of £73,742:

Obviously the above doesn’t include the additional revenue generated from retargeting ads and phone sales.

Since taking on this client, we built a brand, grew the agency and signed a bunch more clients, all on a revenue share basis. I only work on revenue share (or PPL/goal monthly retainer increases) because it’s the only real way to make money in client SEO without continually having to take on new clients. We made over £35,000 (~$49,000) from the above client alone in 2017, and are on track to more than double that this year.

We’re now standing pretty tall with a great client base and a growing UK based team – All of which will be in attendance at the Chiang Mai Conference this year, so make sure to grab a few words with them if you’re in attendance.

The Tools

Realistically, we only internally use a very select few tools. You can mix and match these in your own ventures, and for link building you may require several other tools, though we mostly outsource our link building to vendors who’re specifically good at those types of links.

Ahrefs

This is a MUST HAVE tool; it’s the best SEO suite on the market right now. I’ll be using it throughout this post, and all of the specific tutorials in this guide use it, so it’s a must for anyone looking to do ECommerce SEO seriously. No affiliate links, though in full transparency, Tim did recently give us a free agency account.

We require this tool for backlink checking, keyword research (they have the best keyword research tool on the market), organic position checking and competitor analysis.

An honorable mention goes to Ahrefs Content Explorer, which has helped us easily analyze content from some of our big brand competitors. We’ve found BuzzSumo to be considerably better, but we can’t justify the monthly cost for the amount we use it.

SEMRush and Moz both have similar tool suites that you can use instead of the Ahrefs examples I use, but the data Ahrefs has is always ahead of both of them.

Office

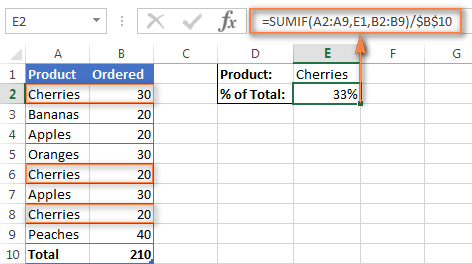

Spreadsheets and SOP PDFs are crucial for your campaign. We use them on a daily basis to track, monitor and coordinate data.

I’ll be showing you the functionalities I use in Excel in this post and exactly how I do it, so no worries if you aren’t very proficient.

Rank Tracker

There are a ton of different options on the market. Ahrefs also has one built in, though it leaves a lot to be desired.

We use SE Ranking, which also comes in handy for smaller sites as it has a semi-decent built-in site auditing tool and can track maps etc. too.

OnPage Auditing

We use several different tools for OnPage auditing:

- DeepCrawl – This is for our OnPage scans, DeepCrawl is the best auditing tool on the market right now but does come with a pretty heavy price tag. If you can’t afford the monthly subscription just pick up a copy of Screaming Frog instead.

- Content King – This allows us to monitor real time changes on our client sites, everything from singular words or links changing to entire pages getting 404’d. It really helps with clients that have trigger happy developers or lack foresight to notify us of big changes, as we get notifications every day of any changes made on the site. It also allows us to make sure the changes we request are being made, and in a reasonable time frame.

- NetPeak Spider – We use this tool for internal linking. It gives you an overview of the number of internal links a page has, the anchor texts it has and an internal “PageRank” score which allows you to plan out internal linking campaigns better. Note that it does pick up menu, footer and sidebar links when scanning so you want to be prepared to get rid of these when collating your data. It’s also got a ton of other features but DeepCrawl is better for those.

That’s pretty much all the tools we use in-house. There are a few other bits and pieces we use for specific tasks or for link building, on the odd occasion we don’t outsource it.

Initial Factors

There are two things you want to look at prior to carrying out your audits and strategic planning, because they can massively affect your campaign.

What CMS Is The Site On?

Depending on the CMS, you’ll run into different problems.

- Magento – Still the best ECommerce CMS there is, but it has problems with automated meta titles (often inserting the same meta title for every page without doing a custom one or using an addon), lack of canonical tags on pages (if you see sidebar filtering, it creates new query pages that don’t canonical back to the main page, in turn creating exact duplicate pages) and can often have bad load times if the site isn’t on a dedicated server or cloud hosting + lacks standardized image compression.

- Shopify – You can’t access server logs, it’s really bad for any sort of link / content asset creation because you often can’t hardcode pages (without the super expensive pricing options), and it lacks a lot of URL customization.

- WooCommerce – Obviously, WordPress wasn’t originally built for ECommerce, so it comes with its own EComm problems, BUT that does mean you’ll likely be able to optimize it much more easily. It does, however, tend to have bulky load times with so many plugins required to make the whole thing work, and it requires some hard coding to optimize content on category pages if your theme or content building plugin doesn’t allow you to do this.

Those are a few examples of CMSs. There are too many others to mention, but 99% of sites will be on the above 3 or using some sort of custom CMS.

Is This A New Site Or An Old Site?

If you’re on a pre-existing site, E-Commerce SEO tends to be much easier because you will likely have pre-existing keyword/conversion data and a platform to optimize around, rather than having to optimize from scratch.

Brand new sites will also take considerably more work, because you can’t really base your keyword research on pages that already convert well.

Initial Auditing

Before you do any SEO work, you want to go ahead and start auditing the site (both OnPage and link profile) to begin building an overall strategy – Remember, most EComm sites are thousands (or tens of thousands) of pages and will require work and growth over a sustained period of time. You can see fast results, but you’ll likely see everything kick in over time where you do a checklist of tasks to improve the site as a whole, whilst targeting individual pages throughout.

I’ll be covering a lot of these in detail later on, but if I were to explain each point in explicit detail it’d take about 2 weeks’ worth of writing.

This is our standardized checklist for our initial audit:

Initial OnPage Audit Checklist

Obviously, you should be taking all of these points and noting everything down to further implement down the line.

- Check Webmaster Tools For Issues – Just export any issues WMT is flagging into a document, such as 404s, crawl errors, wrong country targeting, no sitemap submitted and so on.

- Check The Index Status – Check how many pages the site has indexed in Google (via the site: operator), check the robots.txt if we’re blocking wrong pages or are not blocking pages we should and look at the brand related keywords in Google as well as the brand + main keywords to check for any cannibalization issues for the main keywords.

- Check URL Structure – Make sure it’s not using any stupid, default CMS URLs and that the URL structure is set up correctly and well optimized. We don’t want ridiculously long URLs either, and doing internal 301s of old URLs to new ones doesn’t lose any juice as long as you reindex them as quickly as possible.

- Check Pages For Unique Content – Make sure the pages that require it (category, products etc) aren’t using any duplicate content from other sites and have unique content on them. Also make sure (if you’re doing client SEO) that the clients aren’t using duplicated blog posts from other sites. We have seen a LOT of sites just copying newspaper articles and the like.

- Implement Sitewide Automated Meta Titles – We’ll be manually re-working the page titles for the pages that need them later on, but for now we want to set up a default automated meta title for each type of page, e.g. for category pages we could use: Buy {Category Name} Online At {Brand Name} {Country} – You want to do this for category and product pages at least.

- Check Homepage For Keyword Cannibalization – Most of the time you’ll want to be ranking internal pages for their specific keywords. A lot of companies use these keywords in their homepage meta title and content, cannibalizing the actual page you want to be ranking. Make sure this isn’t happening.

- Check For Schema Markup – A lot of pages will benefit from markup for review stars, addresses, blog posts etc. You can run pages through Google’s Tool here to double check if there is any active schema on the page, and if it’s set up correctly.

- Check For 404s – You can use site auditing tools (like Screaming Frog, Ahrefs and DeepCrawl) as well as Webmaster Tools to check for any 404s and note them in an Excel file so you can find relevant pages to 301 them to later. I’ll be covering 404s in more detail later on.

- Check Load Times – Google is putting more & more importance on load times, and our correlation data has shown a ton of improvements from load time optimization. Just run the homepage, a cat page and a product page through GTMetrix & Pingdom to make sure there are no crazy load times going on.
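The default meta title pattern from the checklist can be sketched in a few lines of Python. This is only an illustration of the templating idea: the function name and the “Example Store” brand are made up, and in practice your CMS or an addon would do the substitution.

```python
# Hypothetical template mirroring the pattern from the checklist above.
CATEGORY_TITLE_TEMPLATE = "Buy {category} Online At {brand} {country}"


def default_category_title(category: str, brand: str, country: str = "") -> str:
    """Fill in the default template and collapse any stray whitespace."""
    title = CATEGORY_TITLE_TEMPLATE.format(
        category=category, brand=brand, country=country
    )
    return " ".join(title.split())


print(default_category_title("Beds", "Example Store", "UK"))
```

You’d still hand-write titles for the pages that matter later on; the template only gives every other page a sensible default.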

Once you’ve done these initial checks you should have a generic overview of the site’s OnPage state. You’ll want to routinely do audits throughout the year on any large enough ECommerce site though.

Initial Link Profile Audit

This data is going to be the foundation of your link building campaign. Does the site need a disavow? Is the anchor text to pages over optimized? Are pages lacking links? And so on.

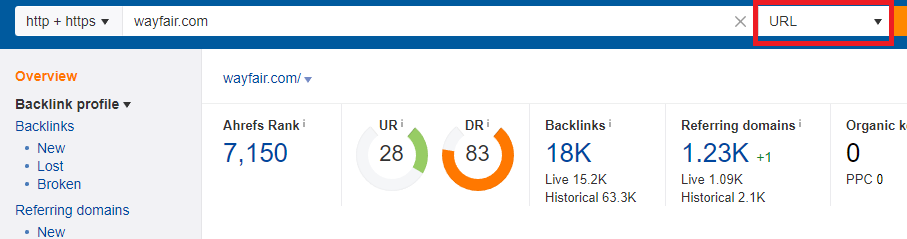

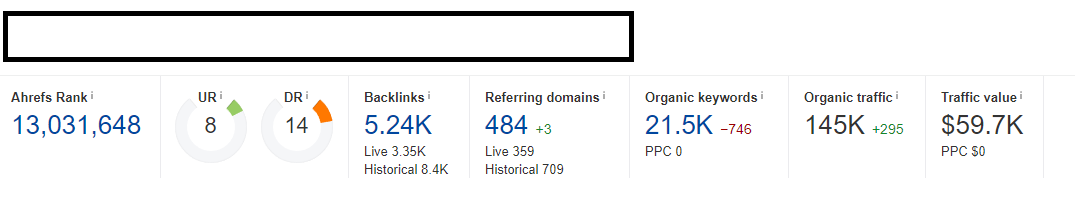

So first you want to run your homepage URL through Ahrefs using the “Exact URL” specification:

This will give you an overview of all the links the homepage has, and most importantly give you an anchor cloud to see if the homepage has over optimized anchor text, or any shady negative SEO going on. Wayfair’s homepage bubble is an ideal anchor ratio:

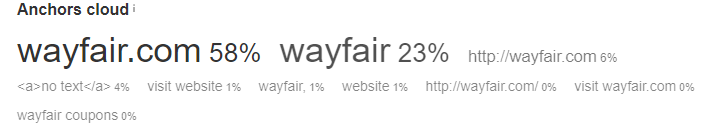

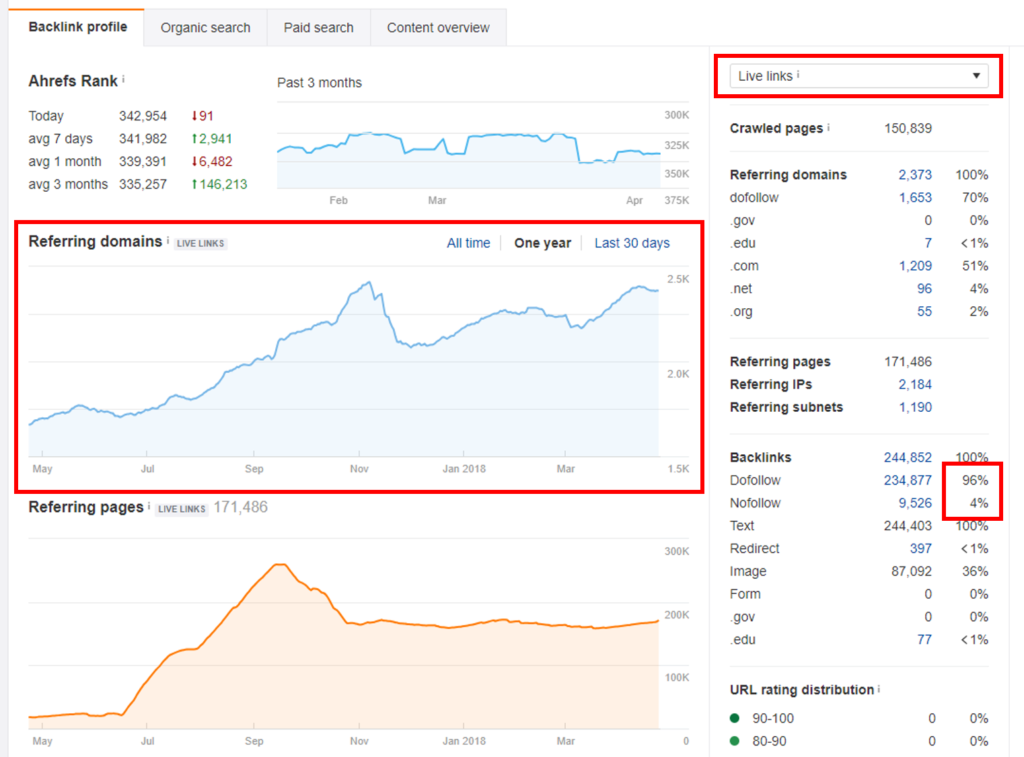

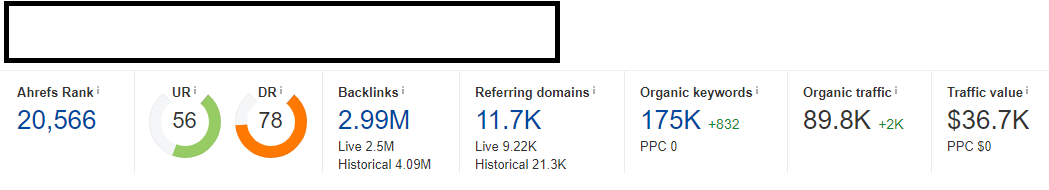

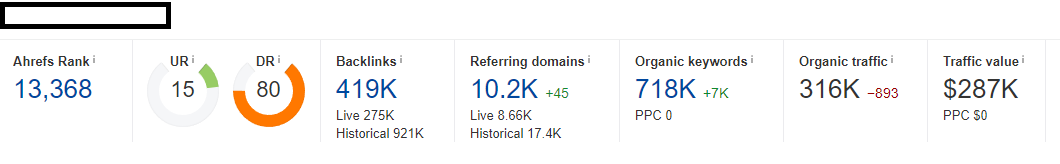

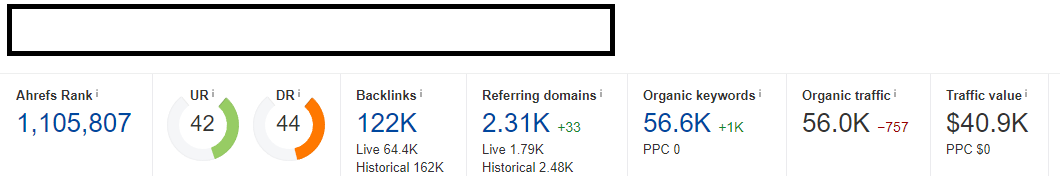

Next you’re going to want to run the full domain (In this case I did “domain without subdomains”, as they had a ton of image links going to the image. subdomain of the site) now:

I’ll be using the above site as an example in this post, as it’s a pretty big site in the UK, but they mostly focus on AdWords so it leaves a bit to be desired SEO wise.

On the overview panel you want to switch to “Live Links” so you’re only getting the data Ahrefs is currently picking up. By default it’s switched to “Recent Links”, which is not the full data profile:

You then want to check the link velocity of the number of referring domains going up/down – This specific link profile has decent growth but it has not continued in the past 6 months or so and dropped a significant amount of links over the November period. You want a sustained upwards graph so your site is continually growing in authority.

Next you want to check the DoFollow / NoFollow ratio – This specific link profile is a bit high on the DoFollow side and could be balanced out with some more NoFollow links, but it’s nothing to majorly worry about.

You can also check the URL rating distribution and make sure you don’t have 95% of your links in the 0-10 URL Rating section, which these guys do have – This is mostly down to a bunch of weird auto-generated links like these:

- http://new-home-trends.com/house-doctor-tv-design-guru-julia-kendell-on-seamless-floors-matt-kitchens-and-mattresses/

- http://content.yudu.com/Library/A1xfqe/YourGuideToTheHospit/resources/14.htm

- https://storify.com/altanlama1988/language-immersion-for-chrome-direct-link-download

This would prompt me to look at running a disavow file for this site. It has a lot of crappy and duplicate links, likely hurting the overall site.

The lack of higher URL rating would also point me in the direction of potentially doing a Tier 2 link building campaign to up the overall authority of a lot of the links the site already has.
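If you’d rather check these ratios on an exported backlink list than eyeball the charts, here is a minimal sketch. The rows and the field names (`url_rating`, `nofollow`) are made up for illustration; a real export will have its own column names.

```python
# Made-up sample rows; real backlink exports use different column names.
backlinks = [
    {"url_rating": 3, "nofollow": False},
    {"url_rating": 8, "nofollow": False},
    {"url_rating": 0, "nofollow": True},
    {"url_rating": 27, "nofollow": False},
    {"url_rating": 45, "nofollow": True},
]


def dofollow_share(links):
    """Fraction of links that are DoFollow (not marked nofollow)."""
    if not links:
        return 0.0
    return sum(1 for link in links if not link["nofollow"]) / len(links)


def low_rated_share(links, ceiling=10):
    """Fraction of links whose URL Rating sits in the 0-ceiling bucket."""
    if not links:
        return 0.0
    return sum(1 for link in links if link["url_rating"] <= ceiling) / len(links)


print(f"DoFollow share: {dofollow_share(backlinks):.0%}")
print(f"Links rated 0-10: {low_rated_share(backlinks):.0%}")
```

A very high share in the 0-10 bucket is the kind of signal that would push you towards the disavow and Tier 2 checks described above.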

ECommerce Keyword Research

The big difference when doing research for ECommerce SEO is buyer intent. A keyword such as “buy raw dog food” (as an example) will likely have a lot less competition than the main keyword “raw dog food” and a much higher conversion rate. This means it’s a lot more effective from an ROI standpoint to rank for “buy raw dog food” than it is to target the main keyword. These kinds of keywords will also lead to additional positive metrics like a much lower bounce rate for your site, which can also have a knock on effect.

Getting Keyword Data

We do keyword research on a page by page basis, and then compare the keyword sheets we build vs each other to make sure we don’t have any cannibalization going on when we begin targeting keywords en masse.

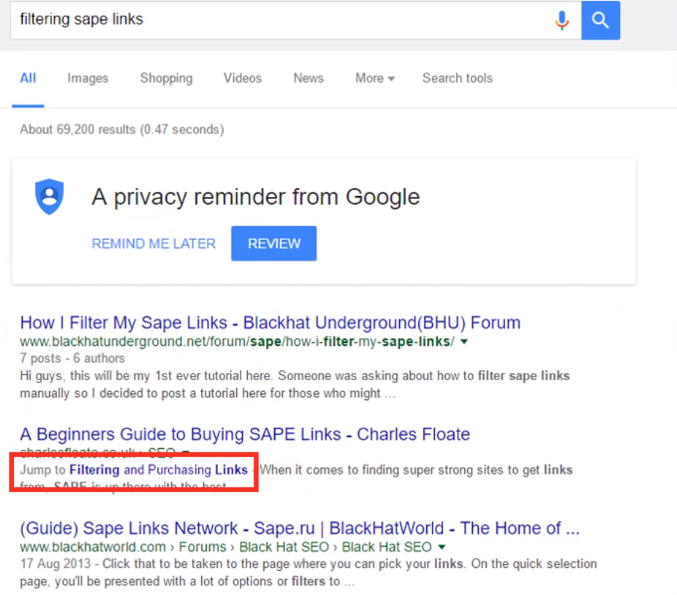

If you have a pre-existing site that already ranks then great, we can simply run the site through Ahrefs and export the organic positions for the page you’re wanting to do keyword research for –

Once you’ve done this you’re going to want to pick several of the biggest keywords from this document and run them through keyword explorer. If you’re using a brand new site, then just use common sense around the main keywords you’d expect and run those through instead. For a new site, you’ll likely want to run several variations, rather than just the few you’d need for an existing site.

Make sure you click the orange update button if it’s presented to you –

This just means you’re getting the most accurate data possible.

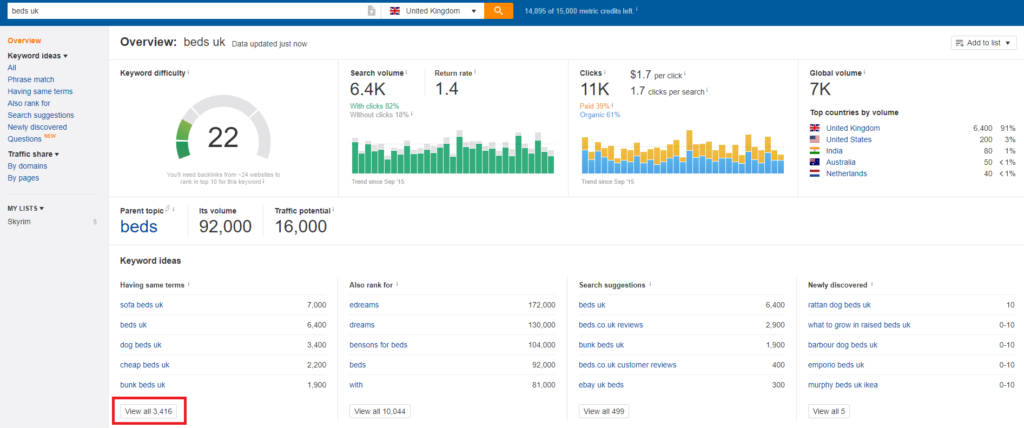

As an example, I’ll run “beds uk” through Ahrefs, then go to the phrase match section of the explorer –

Once you’ve got this massive list in place, you’re going to want to export it to Excel.

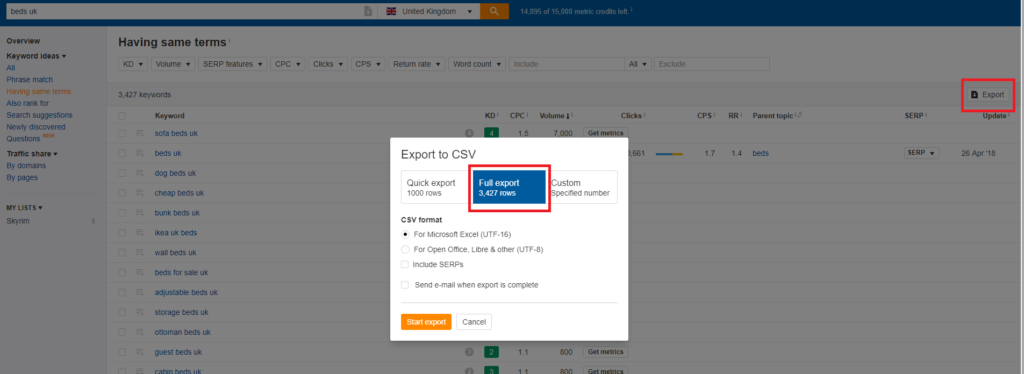

Just click “Export” in the top right of the page and click “Full export” –

This will give you every keyword Ahrefs has found related for this specific term.

You want to do the exact same for several other keyword ideas as well, e.g. if we were trying to get keyword data for the above beds page, we could also run “beds online” or “beds sale” through keyword explorer.

Analytics & Webmaster Tools

For this part of the tutorial, you’re going to want to configure search console to be linked into analytics. This gives you all that juicy keyword data you get in the search console that you don’t get (anymore) in analytics. Here’s a tutorial on how to do this.

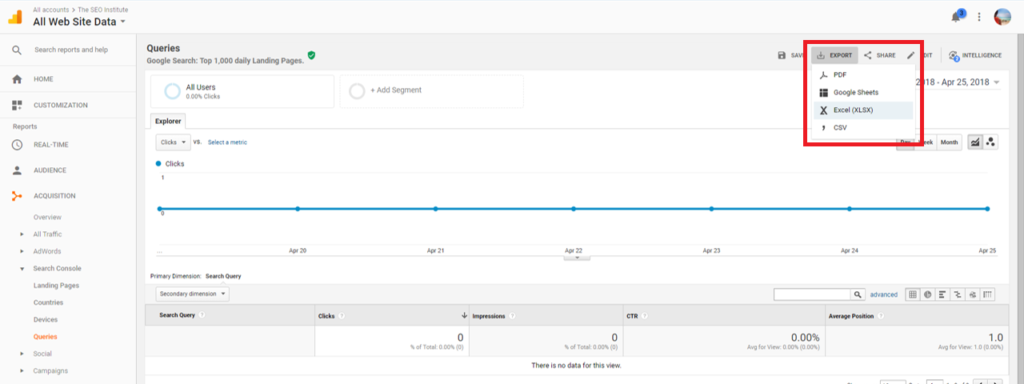

You’re going to want to then go into Analytics and head to Acquisition > Search Console > Queries.

This will give you a populated list from the search console data you’d have on queries. I’ve just used the SEO Institute site (which hasn’t got any organic traffic as of writing this) as an example. You’re going to want to click Export and either go with Excel (which is what I use to format data) or Google Sheets –

This will give you all the data search console has for the specified period. I’d recommend exporting at least a month’s worth of data. This will be a separate keyword sheet from your master sheet, as you’ll need to split the related keywords to the specified pages manually.

I recommend sorting by impressions rather than clicks, and using your rank tracking tool to see the current position you have for that keyword, and optimizing for it accordingly.
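As a rough sketch of that sort, assuming the export has been loaded into rows with `query`, `clicks` and `impressions` fields (the numbers below are made up):

```python
# Made-up rows shaped like a Search Console queries export.
queries = [
    {"query": "beds uk", "clicks": 40, "impressions": 900},
    {"query": "buy beds online", "clicks": 5, "impressions": 2400},
    {"query": "beds sale", "clicks": 12, "impressions": 1500},
]


def by_impressions(rows):
    """Highest-impression queries first, with click-through rate added."""
    ranked = sorted(rows, key=lambda row: row["impressions"], reverse=True)
    return [
        {
            **row,
            "ctr": row["clicks"] / row["impressions"] if row["impressions"] else 0.0,
        }
        for row in ranked
    ]


for row in by_impressions(queries):
    print(f'{row["query"]}: {row["impressions"]} impressions, CTR {row["ctr"]:.1%}')
```

Queries with lots of impressions but a low click-through rate are the ones worth checking in your rank tracker first.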

Formatting Keyword Data

Once you have your sheet for your page, you’re going to want to format your data into a much more actionable spreadsheet.

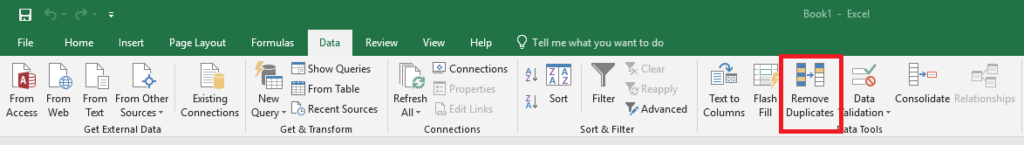

First, you’re going to want to remove all duplicates in your spreadsheet. It’s super easy, just Ctrl+A to select every row in the spreadsheet then go to Data and click “Remove Duplicates” –

Make sure every row is selected and you select “My Data Has Headers” so you don’t accidentally delete any of your headers by mistake.

Once you’ve done that, you want to remove any of the unnecessary things Ahrefs includes that you don’t need.

You may want to keep some of this data, it’s completely up to preference, but I delete –

- Country – Unless you’re looking to do international SEO, you’ll already know what target country you put in.

- Difficulty – Gauging difficulty via any sort of automated metric is a bad idea. Not to mention that the only thing Ahrefs uses to measure its keyword difficulty score is the backlinks pointing to a site, and not the 100 other OnPage or internal factors that play a massive role in actual keyword difficulty.

- Clicks – This is super inaccurate and includes PPC data, which we don’t care about when it comes to SEO.

- CPS

- Return Rate

- Parent Keyword – We’ll already have this in our list anyway.

- Last Updated – That’s why we click the big orange button in the first place.

- SERP Features – This one is 50/50. If you want to locate which keywords have knowledge graphs so you can try to steal that spot then that’s totally fine, but you don’t need to know about shopping results most of the time.

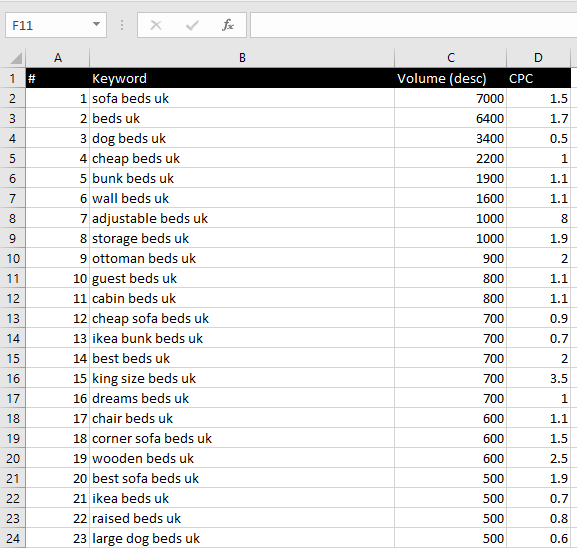

Your spreadsheet after doing this should look something like this –

You’re going to want to do the exact same thing for all of the spreadsheets you’ve compiled, then copy/paste all the data into one master spreadsheet and, again, run the remove duplicates feature to make sure you’re left with unique keywords across the board.
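If you prefer a script to copy/pasting between sheets, the merge and de-duplication could look something like this. It’s a sketch under assumptions: the rows, the field names and the search volumes are made up, and it de-duplicates on the keyword text only rather than on every column.

```python
def merge_keyword_sheets(*sheets):
    """Combine several keyword lists, keeping the first row seen per keyword."""
    seen = set()
    merged = []
    for sheet in sheets:
        for row in sheet:
            key = row["keyword"].strip().lower()
            if key not in seen:
                seen.add(key)
                merged.append(row)
    return merged


# Made-up rows standing in for two keyword exports.
beds_uk = [
    {"keyword": "beds uk", "volume": 1000},
    {"keyword": "cheap beds", "volume": 2000},
]
beds_online = [
    {"keyword": "beds online", "volume": 800},
    {"keyword": "Cheap Beds ", "volume": 2000},
]

master = merge_keyword_sheets(beds_uk, beds_online)
print([row["keyword"] for row in master])
```

Comparing on a trimmed, lower-cased keyword is a design choice here so that “Cheap Beds ” and “cheap beds” collapse into one row.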

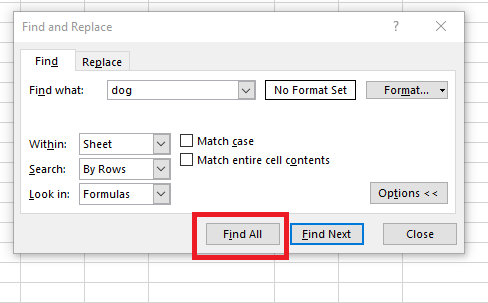

Next, you’re going to want to remove any non-relevant and branded keywords. Super easy, just note down all of these – As an example from the above, we’re going to want to remove any keywords related to “ikea”, “dog”, “dreams” and so on.

Just Ctrl+A again to select the entire spreadsheet data. Then press Ctrl+F and input the keywords you want to remove, one by one –

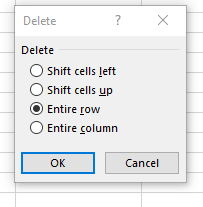

Click “Find All” and it’ll show you a line by line box of all the matches. Click the “Close” box on “Find and Replace”, then press Ctrl + - (that’s the control key, and then the minus key).

Click “Entire Row” and press OK. You’ll want to repeat the above process for every non-relevant keyword. After we did this

Now you’ve got your master list of likely hundreds of target keywords. I like to copy/paste this master list into 2 other sheets in the same Excel document, and sort one by highest monthly searches and the other by highest CPC. This gives you 2 different views: the highest search keywords, and the most expensive keywords people are willing to throw ad money at.

I find the high CPC costs are really beneficial in finding hidden gems in keywords that can have insane revenue gains.
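The row removal and the two sorted views can also be sketched in Python. The keywords, volumes and CPC figures below are made up, and the substring match is meant to behave like a plain find in Excel, so a short term like “dog” will also catch any longer word that contains it.

```python
def drop_unwanted(rows, unwanted_terms):
    """Remove rows whose keyword contains any unwanted term (substring match)."""
    terms = [term.lower() for term in unwanted_terms]
    return [
        row for row in rows
        if not any(term in row["keyword"].lower() for term in terms)
    ]


# Made-up master list; volume is monthly searches, cpc is in pounds.
master = [
    {"keyword": "ikea beds", "volume": 9000, "cpc": 0.20},
    {"keyword": "dog beds", "volume": 8000, "cpc": 0.30},
    {"keyword": "dreams beds", "volume": 4000, "cpc": 0.25},
    {"keyword": "double beds", "volume": 5000, "cpc": 0.40},
    {"keyword": "beds sale", "volume": 3000, "cpc": 0.90},
]

cleaned = drop_unwanted(master, ["ikea", "dog", "dreams"])
by_volume = sorted(cleaned, key=lambda row: row["volume"], reverse=True)
by_cpc = sorted(cleaned, key=lambda row: row["cpc"], reverse=True)

print([row["keyword"] for row in by_volume])
print([row["keyword"] for row in by_cpc])
```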

Buyer Intent Keywords

As I said at the start, the #1 thing when analyzing your keywords for ECommerce is buyer intent. These keywords see lower bounce rates, higher conversion rates and more profitability.

It’s a good idea to further split down your master sheet by finding all keywords with “Buy”, “Sale”, “Cheap”, “Affordable” and so on, then sorting by monthly searches and using your business instinct to decipher which keywords would be the most beneficial.
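A minimal sketch of that split, assuming keyword rows with a `keyword` and a `volume` field. The modifier list comes from the paragraph above; apart from the figures quoted elsewhere in this guide for “buy beds online” and “best beds”, the volumes are made up.

```python
BUYER_MODIFIERS = {"buy", "sale", "cheap", "affordable"}


def buyer_intent(rows, modifiers=BUYER_MODIFIERS):
    """Keep keywords containing a buyer modifier word, highest volume first."""
    hits = [
        row for row in rows
        if modifiers & set(row["keyword"].lower().split())
    ]
    return sorted(hits, key=lambda row: row["volume"], reverse=True)


keywords = [
    {"keyword": "best beds", "volume": 1100},
    {"keyword": "buy beds online", "volume": 700},
    {"keyword": "cheap beds", "volume": 2000},  # made-up volume
    {"keyword": "beds uk", "volume": 1000},     # made-up volume
]

print([row["keyword"] for row in buyer_intent(keywords)])
```

The script only narrows the list down; deciding which of these keywords are worth targeting is still a judgment call.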

Implementing Your Findings

Once you have your keyword research for your target page, you’ll want to start optimizing the pages themselves around them.

I’ll be covering exactly how to do this in the OnPage and link building sections below. These keyword sheets are the foundation for your campaign.

Creating Content For Non-Buyer Keywords

You’ll find a lot of “Best”, “Review” etc style keywords when doing research for ECommerce sites. A lot of agencies and SEOs throw these away as they can’t optimize current pages, but in my opinion this is dumb as hell.

We often export these keywords into their own lists and plan content pieces around them. You can even use these content pieces to power up the main category pages you’re trying to rank for.

As an example, for our beds page, we can look at creating a content piece around “Best Beds” –

- best beds – 1,100 MS

- best beds uk – 900 MS

- best price beds – 500 MS

- best beds 2018 – 200 MS

- best beds to buy – 90 MS

Then we just fill the page up with our best quality/highest profit margin products all on site. Much better way than putting in affiliate links and getting hammered by Google for doing so, as well. These internal links can also pass authority/juice to the actual product pages which can also send trickles of organic traffic through to the site.

You’ll often find a lot of the competing pages for these terms have next to no referring domains, and tend to be short content pieces. Meaning it’s often much easier to rank these kinds of pieces of content than it is ranking the main category page.

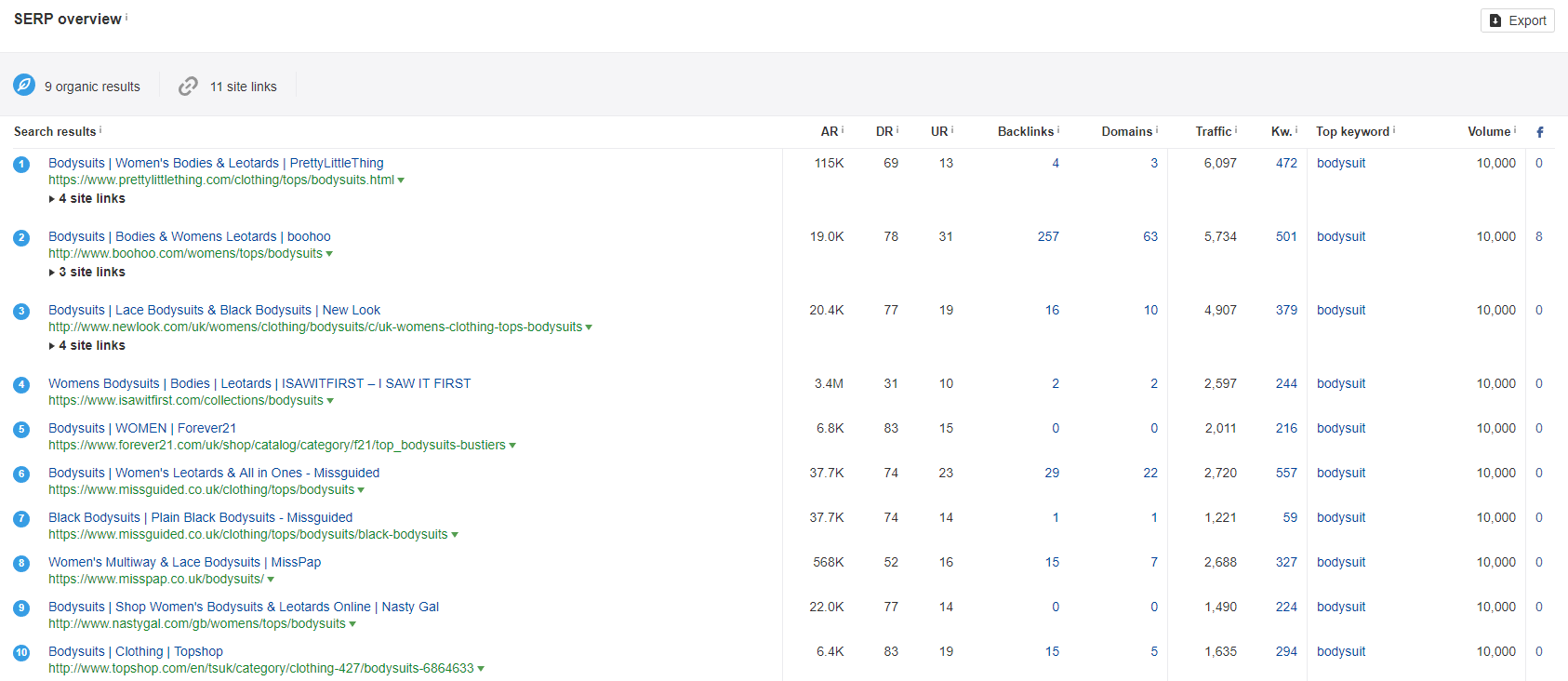

To gauge how big a piece of content you’ll want to create and how many links you’ll need to ideally outrank the competing pages on the SERP, you’ll want to first get the top 3 pieces of content for the keyword, e.g. for this piece they’d be –

- http://www.independent.co.uk/extras/indybest/house-garden/bedroom/best-beds-frames-for-mattress-that-dont-squeak-reviews-a8221161.html

- http://www.expertreviews.co.uk/health-and-grooming/1406305/best-beds-2018-our-pick-of-the-best-single-double-and-king-sized-beds

- https://www.telegraph.co.uk/property/interiors/10-best-beds/

These pages are all between 1,000 and 2,600 words of content. You can either copy/paste the content into Word and check the word count there, or use this tool to check the word count of a web page.

I’d likely create a 3,000 word piece with well optimized images etc.. to be the longest (and best) piece of content in the SERPs.
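If you want to script the word count rather than paste pages into Word, here is a rough sketch using only Python’s standard library. It counts the words in an HTML string while skipping script and style blocks; fetching the page is left out, and it will also count menus and footers, so treat the number as an upper bound.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that sits outside <script> and <style> tags."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def word_count(html: str) -> int:
    """Number of whitespace-separated words in the page's text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.parts).split())


sample = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><h1>Best Beds</h1><p>Our pick of the best beds.</p></body></html>"
)
print(word_count(sample))
```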

Looking at the link profiles of these pages, they all have none or 1 or 2 referring domains, most of which are not super powerful anyway. I’d build 3 contextual links to the page, likely using guest posting.

The above tactic would make our piece the best and most authoritative page in the SERPs, and if we were ranking #1 it would likely bring the page 2,000 – 3,000 visits/month, easily converting into a £10k+/Month page for this specific niche.

You can also expand these pieces of content to include various other keywords. As an example, for the piece above we also found keywords such as –

- best sofa beds – 600 MS

- best bunk beds – 300 MS

- bed reviews – 150 MS

You can either keep these on your main piece and use a table of contents, using schema to create jump-to links in the SERPs like I did with this site –

Alternatively, you could just create separate posts around these keywords and use them all as supporting content for your main category page. Though obviously this requires more time and money spent on creating more pages and building more links across them.

Like I said above, we can also use these pieces of content as supporting content for our main category page. As an example:

If our main category page is domain.com/beds/ We can simply do domain.com/beds/best-beds-uk/ and internally link this piece of content back to the main category page as well. This passes topical authority up the URL hierarchy and allows us to use exact or partial match internal anchors back to our main category page.

Make sure if you’re doing the above style to allow trailing slashes on your category pages.

ECommerce OnPage SEO

Now that we’ve done all of our initial work and formed an idea of the site, we want to start doing the actual work to implement our targeted OnPage SEO campaign. We almost always start with OnPage before link building, as it tends to offer the quickest wins in SEO right now, and most ECommerce sites have a bunch of pre-existing problems. Links at the moment can sometimes take 2 – 6 weeks to take full effect.

HTTPS / SSL Certificates

Any and every ECommerce store in 2018 should be using some form of an SSL certificate. Not just to keep your customers safe from man in the middle attacks when purchasing on your website, but also because we’ve seen a direct correlation between SSL and rankings (especially for buyer intent related keywords) when comparing SSL’d and non-SSL’d sites.

EV SSL also seems to hold more direct ranking value than a standard SSL certificate does, because EV directly links a website to a real world company, giving Google an even stronger sense of trust in a website.

Meta Title Optimization

A lot of SEOs recommend doing CRO based meta titles or using generic meta titles like the big brands do. I think this is BS though. Most of the pages that you’re going to be optimizing these meta titles for (categories, product pages etc.) won’t have many links going to them, and you likely aren’t going to be working with a huge brand that has the authority to rank regardless.

Instead, we’re going to want to keyword optimize our meta titles. Google still takes titles into account when ranking your site, and we’ve seen fantastic boosts JUST from re-working the titles. As an example, we recently changed a meta title on our client’s site to be optimized around several keywords (and their variants) and saw rises from the bottom of page one to the top 5 for keywords ranging from 3k to 18k monthly searches.

I’ll be using Wayfair UK as an example, specifically their beds page: https://www.wayfair.co.uk/furniture/cat/beds-c224687.html

The current meta title is: Beds | Wayfair.co.uk

You should be using the keyword research data you pulled in before, but I’ll also give you an example of how you can optimize meta titles very quickly here.

I’ll run it through Ahrefs to see what keywords the page is currently ranking for –

As you can see, it’s already ranking really well for a ton of terms. Including #1 for “beds”.

However, there are several keywords related to “Online”, “Sale”, “Buy” and “UK” that we could better optimize the meta title for.

I’d go with a meta title like:

Beds Online Sale At Wayfair UK

This incorporates the above keywords, and the sheer authority of the page will likely knock us up a few spots from those #4 – #8 positions. The combined MS of those keywords (just in the above screenie) is 9,000. That’s a huge amount of additional traffic to a page with an average sale price of over £200.
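A quick way to sanity check a title against the modifiers you care about is to list the ones it doesn’t contain yet. This is just a sketch; the helper name is made up, and the modifier list is the one from the Wayfair example above.

```python
import re


def missing_modifiers(title, modifiers):
    """Return the modifiers that don't appear as whole words in the title."""
    words = set(re.findall(r"[a-z0-9]+", title.lower()))
    return [m for m in modifiers if m.lower() not in words]


modifiers = ["online", "sale", "buy", "uk"]

print(missing_modifiers("Beds | Wayfair.co.uk", modifiers))
# -> ['online', 'sale', 'buy']
print(missing_modifiers("Beds Online Sale At Wayfair UK", modifiers))
# -> ['buy']
```

Note that the “uk” inside “Wayfair.co.uk” counts as present here because the title is split on punctuation. Whatever is still missing after the rewrite (“buy” in this case) is a candidate for the on-page content instead.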

We could also optimize the content at the bottom of the page itself to incorporate the “Buy” keywords, and the “Shop”/”Store” keywords as well. We’ll save how to optimize content around this for the next chapter.

Content Optimization

A lot of clients we take on either have very small amounts of content on their money pages, or just none at all. I highly recommend formatting content onto your category pages, especially if you’re competing vs. big brands.

I’ll split this content optimization into the several types of content a traditional ECommerce site would have, as all of these will require a different type of optimization.

Optimizing Category Pages

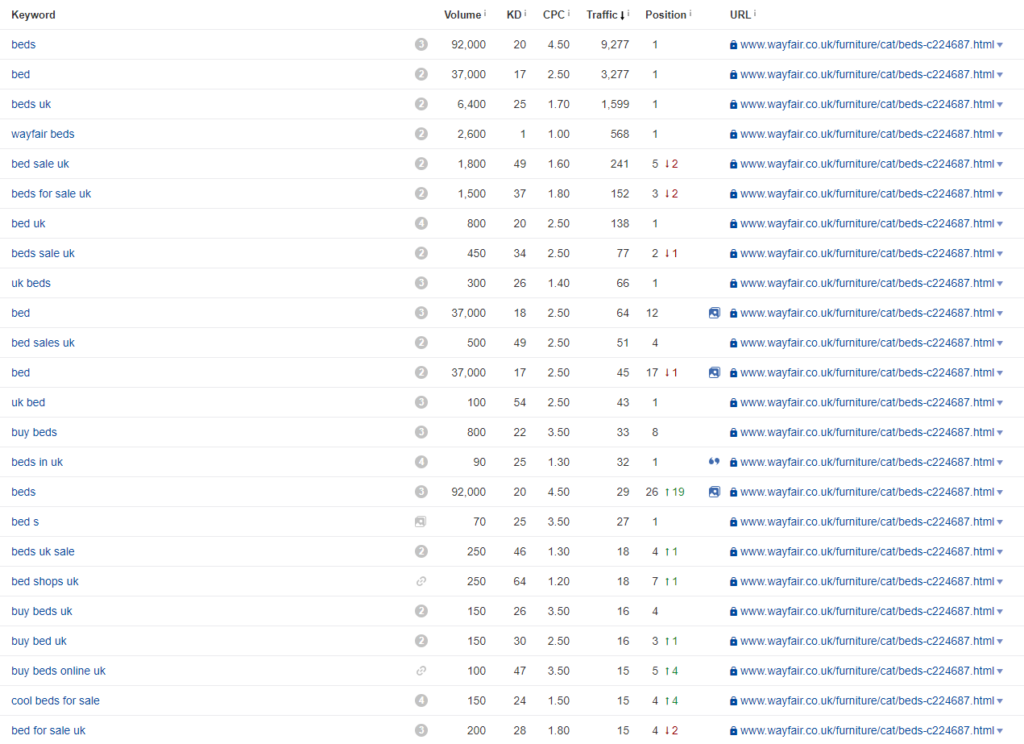

I prefer formatting my category pages with a small bit of content at the top, which is generally heavily keyword optimized and uses internal links that are friendly to the user more than the search engine, whilst putting a much larger guide at the bottom of the page.

This is my standard setup for a category page –

Note: I didn’t include the “Related Products” tab that a lot of companies have in this layout. You can either put this below or above the large description.

You want the H1 at the top of the page to be the category name, e.g. if it’s the beds page, just make your H1 “Beds”. If you’re a smaller or newer site looking to take some SERP real estate quickly, I recommend trying a keyword optimized H1 instead, such as “Buy Beds Online” (~700 UK MS).

Note: Never use more than one H1 per page.

For the actual content of the small description, you’re not going to want to use any further headings. Just give a brief description of the category page and products, with some internal links to other pages that are relevant for your user.

Inside the larger category description at the bottom, I recommend starting the content with an H2 that uses a different keyword than your H1. Underneath this I recommend a further, small 50-100 word description. You then want to do blocks of 2-3 paragraphs with varying H2s focused around both keywords and user friendly content. As an example, I might do:

H2 #1 (Large Cat Description) – Bed Store UK

With a small description about our online bed store.

H2 #2 (Large Cat Description) – Different Types of Beds

With some information on the different types of beds.

And so on. This both covers the keywords you’re trying to rank for and offers users who actually read the page some information that may help their purchase.
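To check that a category page follows this layout (a single H1, then keyword and user focused H2s), you could pull out its heading outline with a few lines of standard library Python. The sample HTML below is a made-up page using the headings from the example above.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect the text of every <h1> and <h2> on a page, in order."""

    def __init__(self):
        super().__init__()
        self.headings = []  # [tag, text] pairs
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2"):
            self._current = tag
            self.headings.append([tag, ""])

    def handle_data(self, data):
        if self._current:
            self.headings[-1][1] += data

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None


def outline(html):
    """Return (tag, text) tuples for the page's H1 and H2 headings."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return [(tag, text.strip()) for tag, text in parser.headings]


page = (
    "<h1>Beds</h1><p>Short intro.</p>"
    "<h2>Bed Store UK</h2><p>About our online bed store.</p>"
    "<h2>Different Types of Beds</h2><p>Some information.</p>"
)
headings = outline(page)
h1_count = sum(1 for tag, _ in headings if tag == "h1")
print(headings)
print("OK" if h1_count == 1 else f"Found {h1_count} H1s, expected exactly 1")
```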

Note: You can use “Read More” buttons; a lot of big sites do this, including Wayfair. Just make sure once it’s indexed that you check the plain text version of the Google cached copy of your page to make sure Googlebot is actually indexing the content. I recommend only using these content hiding buttons on the bottom piece of content rather than the top, to avoid any sort of algorithmic cloaking penalties Google may impose on the site.

Optimizing Product Pages

You want to make sure that you’re optimizing your product pages around conversion rate optimization rather than SEO, but it’s always a good idea to export an entire site’s product list and run the product names through a keyword research tool, just to see if any specific product names have their own search volume. If they do, you can optimize the product page around those, and even potentially link build to them.

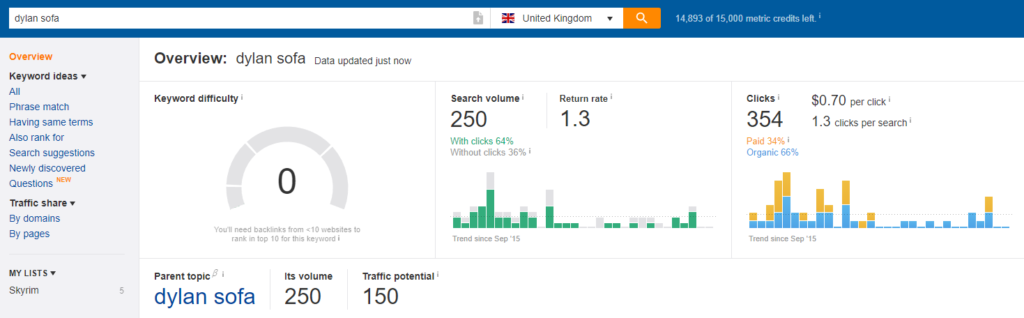

An example of this would be for the “Dylan Sofa” in the UK, which has 250 monthly searches –

None of the pages ranking have any RD going to them and generally have very small amounts of content. Considering the model can range from £199 – £699, it’s well worth the investment of a link and a small amount of content to get a page ranking for this keyword.

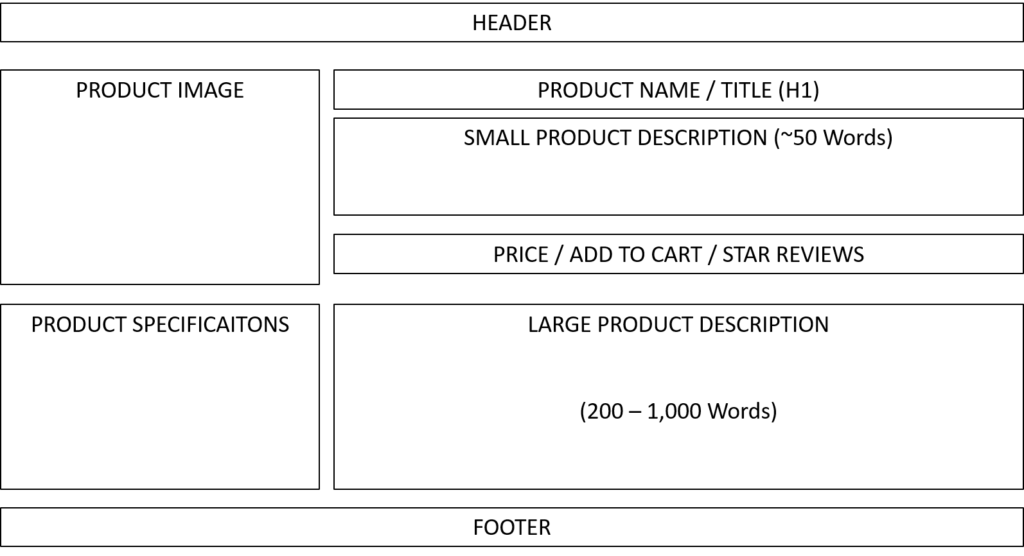

Much like the category pages, I like having a small description at the top of the page with a larger one underneath. This is because Google indexes pages from top to bottom, and the only real thing they can read on a page is the words used.

This is the standard, optimized product layout I use –

Obviously you want this to be focused more around CRO, so if it doesn’t make sense for your business then don’t force SEO on the product pages as they won’t be the main revenue drivers for the site anyway.

We don’t want to be using keyword optimized H2s for the products in the large description like we did with the category pages. This is because we don’t want the product pages cannibalizing the main category pages, which I see happen a lot with niche ECommerce sites. Simply explain the points of the product and use H2s where necessary for the breakdown of what’s included in the product. As an example, if it’s a software product you could break down the different features inside the software that you’d get or if it’s a sofa (couch) you could break down the components and the different factors the user would care about such as the colour scheme of the sofa.

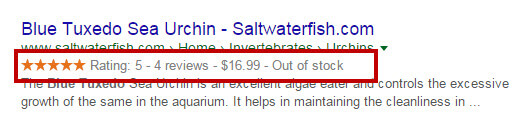

You also want to be using product & offer markup (schema) on your product pages. This allows Google to read specific criteria of your product, and gives you a bigger listing in the SERPs.

Product schema gives Google the name of the product. Offer schema gives Google the price and the currency the price is in for that page, like this –

Image Source: Search Engine Land

Note: If you’re doing an international site, you’ll have to work with your developers to use different offer markup for each currency that you sell products in for the different versions of those pages.
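
To make the Product and Offer relationship concrete, here is a minimal sketch in Python that builds the markup as JSON-LD. The helper names (`product_jsonld`, `as_script_tag`), the example.com URLs and the USD price are all hypothetical; only the schema.org types and property names (`Product`, `Offer`, `price`, `priceCurrency`) are real. It produces one block per currency version of the page, in line with the note above.

```python
import json


def product_jsonld(name, price, currency, url):
    """Build a schema.org Product with a nested Offer as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. GBP or USD
            "url": url,
        },
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes into the page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">' + body + "</script>"


# Hypothetical product, prices and URLs: one block per currency/site version.
uk = product_jsonld("Dylan Sofa", 199.00, "GBP", "https://example.com/sofas/dylan-sofa")
us = product_jsonld("Dylan Sofa", 249.00, "USD", "https://example.com/us/sofas/dylan-sofa")

print(as_script_tag(uk))
```

Your developers would normally generate this from the product database rather than by hand, so that the price in the markup always matches the price on the page.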

Optimizing Blog Posts / Content Pieces

When it comes to optimizing your blog posts and content pieces around the site, there are 3 main points I want to cover with every new piece we create or have on the site –

- Keyword Opportunity – Does it offer its own ranking potential, or does it at least power up another page on the site as a supporting piece of content?

- Schema – This is something hardly any ECommerce sites use on their blog posts or content pieces. We want a table of contents, article markup, breadcrumbs and potentially video schema if you have a main video on the piece.

- Internal Linking – What internal linking opportunities are there in the piece, and do they relate directly to any of the pages we’re currently trying to rank on the site?

To analyze a page for keyword opportunity, you can use Daniel’s keyword model tutorial here –

In terms of schema, it really depends on what kind of a piece you’re doing.

- Table of Contents – If you have more than 3 H2s I recommend using a marked up table of contents. If you’re using WooCommerce you can just use this plugin. If you’re using any other CMS then it’s ideal if you can have your developers create a shortcode which automatically generates a marked up table of contents for all the H2s on a page.

- Breadcrumbs – Most CMSs will have this in place already. You simply want a breadcrumb based navigation at the top of the post. This helps users get around and gives you much nicer looking URLs in the SERPs.

- Article Markup – This helps you get submitted into Google News queries and Google’s in-depth articles. All you need for the markup is the title of the post (headline), the featured image you’re using for the post and the date the post was published.
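
As a rough illustration of the three article fields listed above, here is a minimal Python sketch that builds Article markup as JSON-LD. The function name, headline, image URL and date are hypothetical placeholders; `headline`, `image` and `datePublished` are the real schema.org property names.

```python
import json
from datetime import date


def article_jsonld(headline, image_url, published):
    """Build minimal schema.org Article markup from the three fields above."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "image": [image_url],
        "datePublished": published.isoformat(),
    }


# Hypothetical blog post details.
markup = article_jsonld(
    "Best Beds 2018",
    "https://example.com/images/best-beds.jpg",
    date(2018, 3, 1),
)
print(json.dumps(markup, indent=2))
```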

You can use the internal linking guide later on in this post to decide which keywords you should be using to internally link content pieces back to money pages.

Creating Internal Buyers Guides

Much like the “best beds” piece of content I talked about earlier, we can create internal buyers guides within the category hierarchy to act as supporting content for the main category page.

All you want to do is create a piece of content called a “Buyers Guide” around the main category page, explaining to the user the different options they’d have for that category. Ideally you want this piece to be above 1,000 words.

You’ll then want to put it under the category URL hierarchy, an example of this would be:

If your category page is example.com/category/ then you want your buyers guide to be under the URL, example.com/category/buyers-guide/ and be internally linking back to the main category with a variant of your main keyword.

Note: If you have several sub-categories, you can also use these buyers guides to further internally link to those categories as well.

As I said above, you’ll want to make sure your categories are using trailing slashes if you’re going to do this.

A great example of how some companies (with insane content budgets) take this to the next level is a UK based company called Bathstore: https://www.bathstore.com/products/baths/buyers-guide – They do several buyers guides for every category they have on the site, passing a ton of topical authority around each category page. They often don’t even internally link, because the URL hierarchy and breadcrumbs pass the authority back up the chain anyway. I do recommend dropping an internal link though, especially if you’re doing multiple guides, because it allows you to use multiple anchor variations across the guides.

Meta Descriptions

Google doesn’t actually put any ranking weight on meta descriptions anymore, but they can play a (rather small) role in CTR for users looking for the site.

I recommend just using preset templates for your meta descriptions across the site, unless you have a fairly small site and the time to custom write them for every page.

Here is an example preset description you can use for category pages:

Buy {Category Name} Online At {Brand Name}, Find The Best Price Anywhere On {Category Name or Secondary Keyword} With Free Next Day Delivery.
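
If your CMS doesn’t support templated descriptions out of the box, the preset above is easy to generate in bulk. This is a minimal sketch in Python; the function name, the sample category and brand, and the 160 character warning threshold are my own assumptions rather than anything official.

```python
TEMPLATE = (
    "Buy {category} Online At {brand}, Find The Best Price Anywhere On "
    "{secondary} With Free Next Day Delivery."
)

# Assumed rough cut-off for what fits in a SERP snippet; adjust to taste.
MAX_LENGTH = 160


def category_meta_description(category, brand, secondary=None):
    """Fill the preset; fall back to the category name if no secondary keyword."""
    return TEMPLATE.format(
        category=category, brand=brand, secondary=secondary or category
    )


desc = category_meta_description("Sofa Beds", "Example Store", "3 Seater Sofa Beds")
print(desc)
if len(desc) > MAX_LENGTH:
    print(f"Warning: {len(desc)} characters, may be truncated in the SERPs")
```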

URL Structuring

You always want to try and have the shortest URLs possible in SEO, but in ECommerce it’s often best to split your structure properly.

This means keeping certain pages on a site like blog posts under the /blog/ hierarchy, or keeping sub-categories under their main category, e.g. /beds/sofa-beds/ – If your CMS adds in things like “category”, “product” etc into the URL, then I’d try removing these as they just add even more length to the URL.

For your product pages, you can try to keep them under the same URL structure as your categories, e.g. /beds/sofa-beds/3-seater-sofa-bed

Make sure you enable trailing slashes until the last tier of the structure, e.g. the product would be the last tier of the above URL structure, or the specific blog post page would be the last tier of that structure, e.g. /blog/best-beds-2018

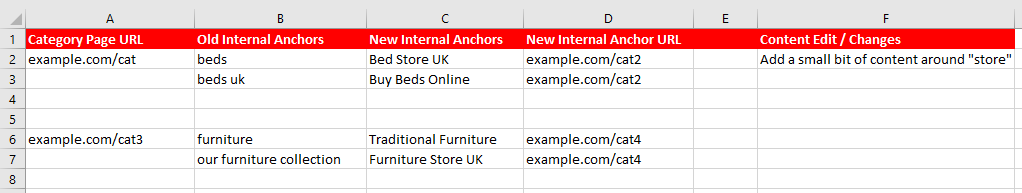

Internal Linking

We’ve seen massive success with re-doing the internal links of an existing site. Most sites are way over optimized or way underutilized, using the same keywords every time they link between pages. We use NetPeak Spider ($118 for the year) to give us an idea of the internal links used between pages and the internal PageRank –

Most of the time you’re going to have to manually check pages to see which internal links they currently use and what better alternatives there are. Spreadsheets come in handy for managing all of this.

Bear in mind that Google does not assign much weight to menu, footer and sidebar links, so you can for the most part ignore these. NetPeak includes them in its export, so just remove them from the spreadsheet.

Your keyword research sheets will come in really handy when picking the different keywords you want to re-do pages for.

We usually begin by re-doing every category page’s internal links one by one. Most sites will have a maximum of 50 – 100 category pages, mostly without a huge amount of content. If you’re planning on re-doing the content of these category pages then you can choose the internal links at that point; if not, you’ll have to get out a brand new spreadsheet and insert each category page into the first column.

Once you have every category page in the spreadsheet, you’re going to want to go one by one populating the spreadsheet with:

- Old Internal Anchors – Whether you want to delete, keep or change.

- New Internal Anchors – This is where we use our keyword sheet for each page we want to change the internal anchor for.

- Content Edits / Changes – You don’t want to just change 100 pages’ internal links. It’s a good idea to make small edits or add additional paragraphs here and there, to also hit the freshness update and help get these page changes indexed faster. It’s also difficult to get some of the new keywords onto some pages, so making small updates specifically around those new keywords is a good idea.

Your spreadsheet should then look something like this –

Obviously your sheet should be significantly more populated than mine.

If you’re doing client SEO, it may take some small training with the client or their developers to be able to get them to understand implementing these kinds of sheets.

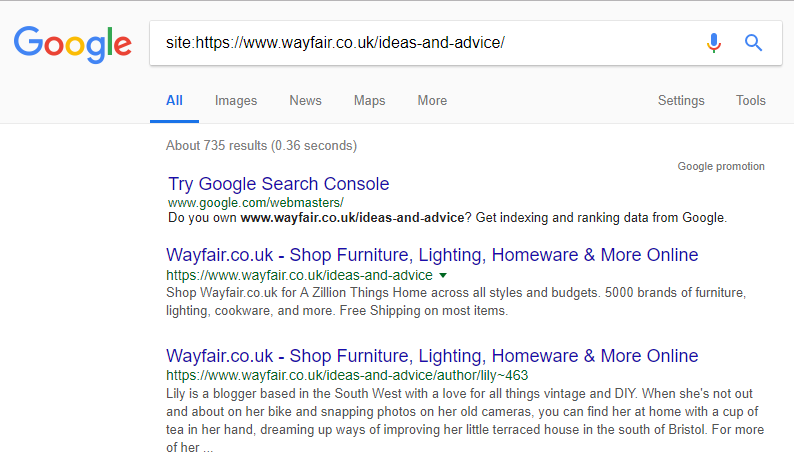

You’re going to want to do the exact same process for blog posts as well. Almost all CMSs will put the blog posts under the /blog/ URL, but some may do it differently. In Wayfair’s case we can just use the Google site operator on their blog URL –

This brings back all of the pages on the site for this URL, which gives us an idea of how many blog posts we’re likely going to have to re-work the internal links for – Yes, ECommerce SEO is a lot of work.

You can then simply use your DeepCrawl or Screaming Frog export to start your sheet by removing any URLs that aren’t under the blog directory. Then you’ll need to repeat the process I explained above.
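
If the export is too big to filter comfortably by hand, a few lines of Python will do the same job. This is only a sketch: the `urls_under` helper and the sample URLs are hypothetical, and in practice you would read the list from the URL column of your crawler’s CSV export.

```python
from urllib.parse import urlparse


def urls_under(urls, prefix="/blog/"):
    """Keep only the URLs whose path sits under the given directory."""
    return [u for u in urls if urlparse(u).path.startswith(prefix)]


# Hypothetical slice of a crawl export.
crawl_export = [
    "https://example.com/",
    "https://example.com/beds/sofa-beds/",
    "https://example.com/blog/best-beds-2018",
    "https://example.com/blog/how-to-choose-a-mattress",
]

blog_urls = urls_under(crawl_export)
print(blog_urls)
```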

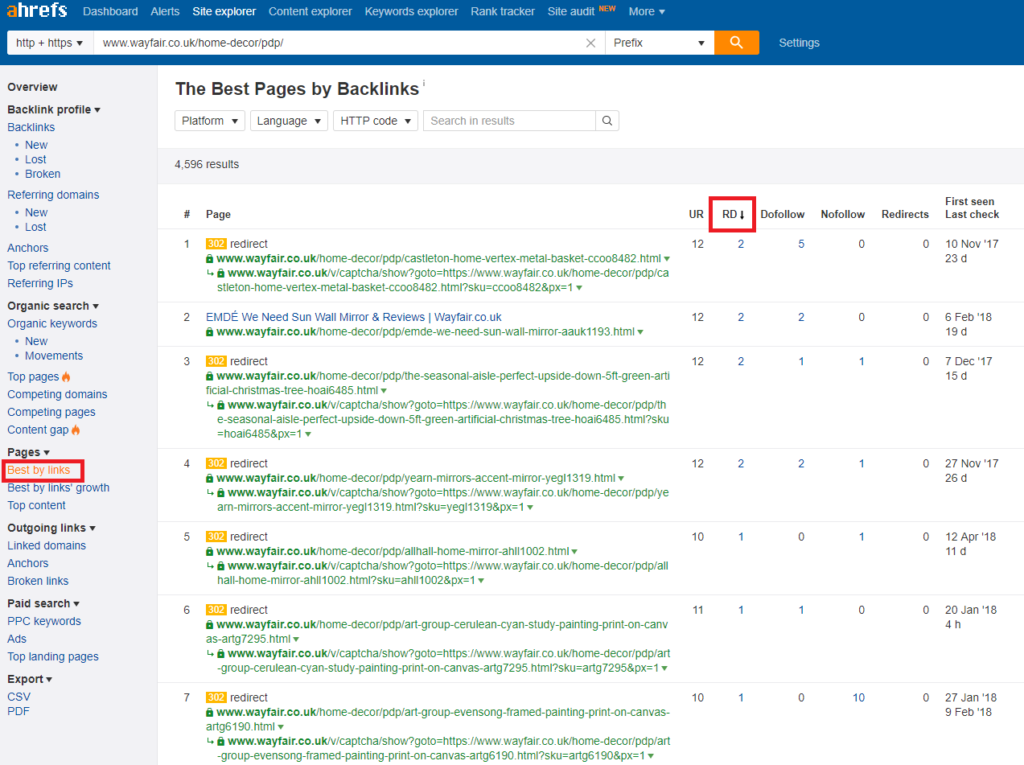

Most product pages don’t use internal linking much, and they don’t tend to hold much internal or external power. It’s worth running the products URL prefix through Ahrefs and sorting by “Best By Links” –

Sort this by RD, not UR and you’ll be able to find pages that have a few links pointed at them which potentially makes them worth internally linking back to some of our relevant money pages from the product’s descriptions.

Homepage Link Sculpting

Most sites’ homepages are the most powerful page (in terms of RD/Link Juice) on the site. This means you are missing out on link juice if you aren’t trimming down the fat of too many links and utilizing small snippets of text to get powerful internal, contextual links.

I recommend looking for opportunities to add small snippets of text that won’t hurt the CRO of the homepage and utilizing the juice to optimize for your most profitable keywords.

404 Analysis

404s on ECommerce sites are the bane of all SEOs lives. The cycle of products, the random times developers and clients somehow delete pages and

Content King makes it considerably easier to monitor 404’d pages, but it is a pretty expensive tool.

We like to use 2 site audit scans (normally DeepCrawl and Screaming Frog) just so we can get the most from different crawlers. You can just use one if you don’t have both. Once you’ve run the scans, you want to export the 404s from them into a spreadsheet.

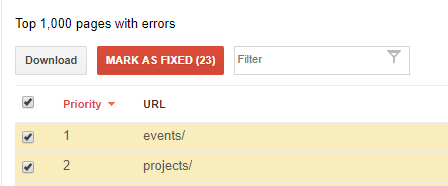

Then you want to go to Webmaster Tools > Crawl > Crawl Errors > Not Found, you should then get a list like this –

Select all and click download.

Now you want to copy and paste all of the 404s that you’ve found into one master sheet. Using the remove duplicates tool I showed you in the keyword research section above, you want to remove any duplicate 404s that the software has found.

This will then give you one huge spreadsheet that you can use to go through manually 301’ing the URLs to relevant pages.
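
For very large sites, merging and de-duplicating the exports can also be scripted. The sketch below is one way to do it in Python; the function names and sample URLs are hypothetical, and the normalisation (trimming whitespace and lowercasing the hostname) is my own assumption about which duplicates are worth collapsing.

```python
from urllib.parse import urlsplit


def normalise(url):
    """Trim whitespace and lowercase the host so near-identical rows collapse."""
    parts = urlsplit(url.strip())
    path = parts.path or "/"
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme}://{parts.netloc.lower()}{path}{query}"


def merge_404s(*sources):
    """Combine several lists of 404 URLs into one, keeping first-seen order."""
    seen = {}
    for source in sources:
        for url in source:
            if url.strip():
                seen.setdefault(normalise(url), None)
    return list(seen)


# Hypothetical exports from two crawlers and Webmaster Tools.
deepcrawl = ["https://Example.com/old-page", "https://example.com/discontinued-sofa "]
screaming_frog = ["https://example.com/old-page"]
webmaster_tools = ["https://example.com/old-category/", ""]

master = merge_404s(deepcrawl, screaming_frog, webmaster_tools)
print(master)
```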

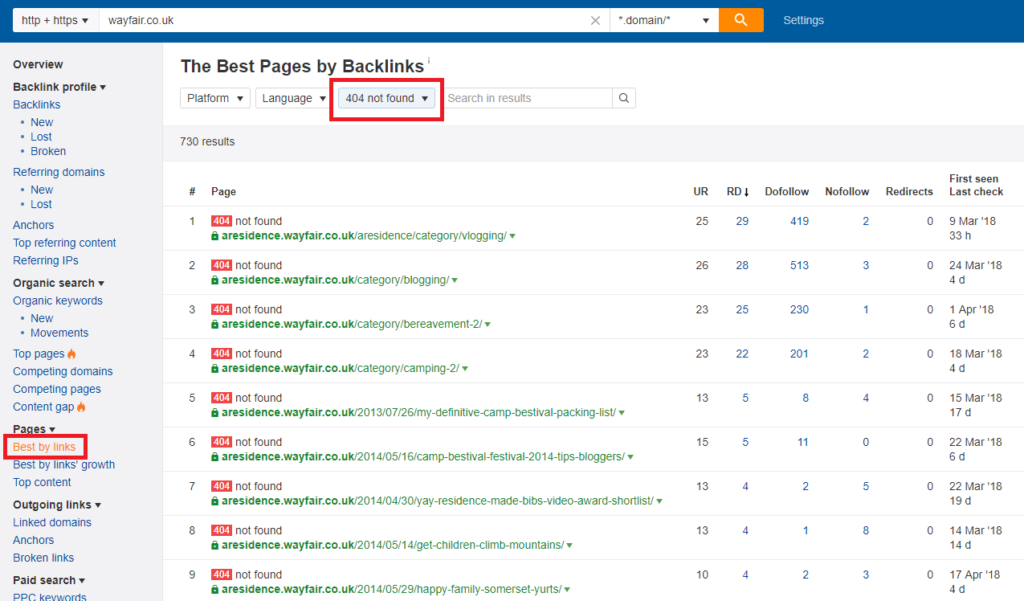

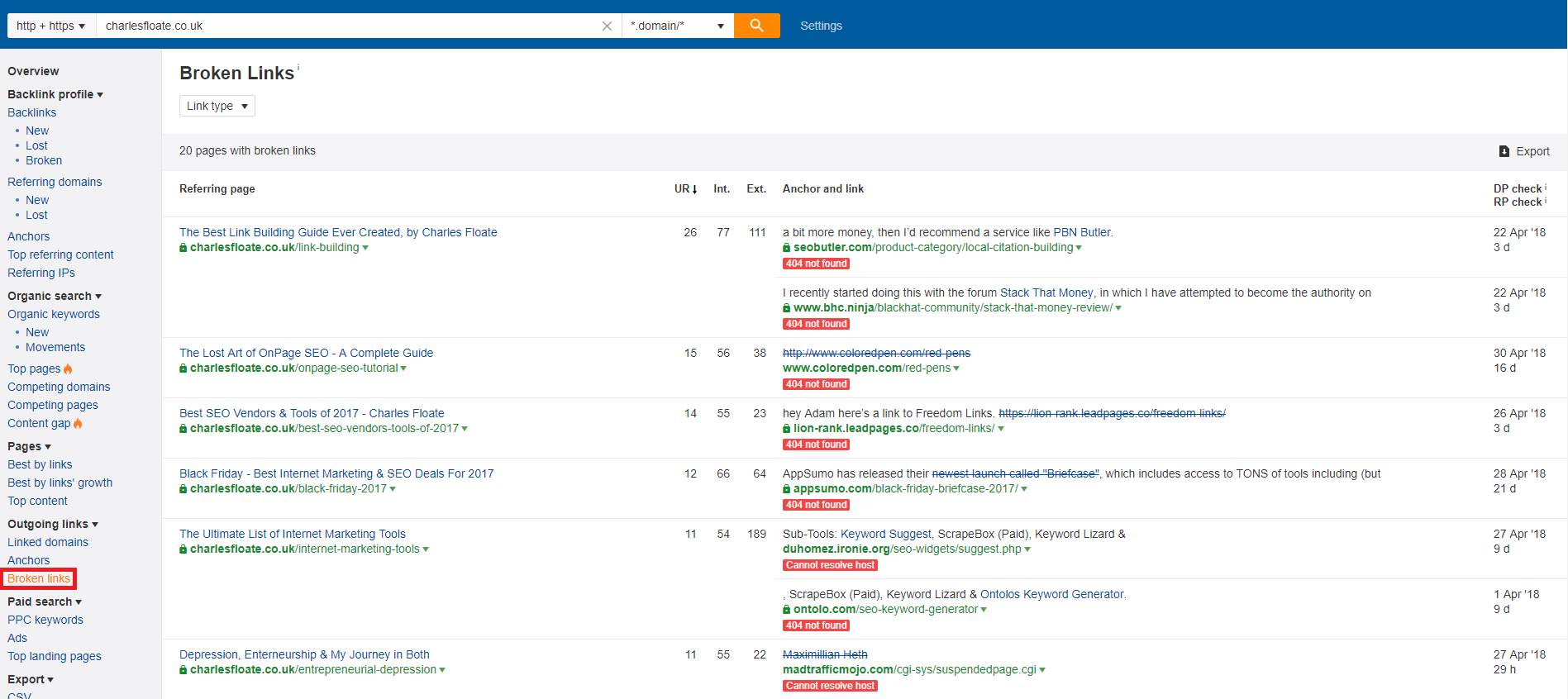

You can also use Ahrefs to find 404’d pages (Much like people do for broken link building) that have external links pointing at them. Just go to the site explorer, click Pages > Best By Links and select the HTTP Code as 404 –

Sort by RD and you’ll be able to find lost link juice across a site.

In Wayfair’s case we’ve managed to find 112 pages that have referring domains pointed at them, with over 300 backlinks spread between them. That’s a lot of lost link juice that we can 301 at relevant pages.

Sitemaps

I always recommend having an HTML version of your sitemap for users to be able to click around. This should be the sitemap that is linked in your footer, and it should also include a link (at the bottom of it) back to your XML sitemap/s.

The way you set up your XML sitemaps is going to massively depend on the size of your site. If you’re working with a few hundred pages then you can just have one sitemap covering the entire site’s URLs. However, if you’re working with large sites that have thousands or tens of thousands of pages, then you’re going to want to split this up into sitemaps that each have their own purpose.

Most of our clients are 5,000+ page websites and have hundreds of blog posts, thousands of products and tens or even hundreds of categories and subcategories. We like to split our sitemaps up into several sub-sitemaps.

- Main Pages – Home, About, Physical Store Pages, Contact us etc..

- Main Categories

- Sub Categories – These are any categories within your main categories, e.g. example.com/beds/sofa-beds/

- Blog Posts – If you’re working with a site in the high hundreds or thousands of blog posts, it might be worth splitting these even further down into category based sitemaps.

The main sitemap itself is just linking to all of these sitemaps.

Make sure if you’re using any sort of automated sitemap building plugin or software that you manually check through the sitemaps – You don’t want paginated cat pages, login/register pages and so on.. included in the sitemaps.
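
To show what the main sitemap looks like when it only links to the sub-sitemaps, here is a minimal Python sketch that builds a sitemap index with the standard library. The file names and the example.com domain are hypothetical; the `sitemapindex`, `sitemap` and `loc` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index document that links to each sub-sitemap."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(root, "sitemap")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(root, encoding="unicode")


# Hypothetical sub-sitemaps matching the split described above.
index_xml = build_sitemap_index([
    "https://example.com/sitemaps/main-pages.xml",
    "https://example.com/sitemaps/main-categories.xml",
    "https://example.com/sitemaps/sub-categories.xml",
    "https://example.com/sitemaps/blog-posts.xml",
])
print(index_xml)
```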

Canonical & Pagination

One of the major problems I see with ECommerce sites is the wrong use or complete lack of use of the canonical tag.

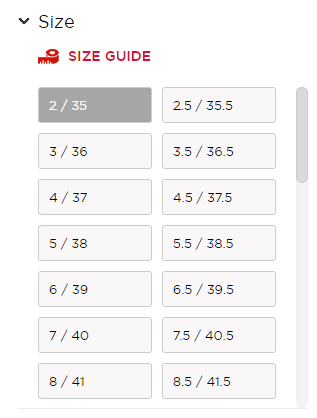

Magento has a problem with a complete lack of using the canonical tag altogether. Out of the box, the filtering options for product category pages in Magento creates new URL parameter pages. Kurt Geiger had this exact problem but recently fixed it by adding the canonical tag to these new URLs. Magento creates the URLs via the filter options on the left hand side of the page –

The URLs end up going from the main URL of https://www.kurtgeiger.com/women/shoes to https://www.kurtgeiger.com/women/shoes?page=1#size/2202f2035

If you’re running Magento and use these sidebar filters, make sure to have your developers fix this.

With a shoe website, this was creating up to 40 additional, duplicate pages which is a massive problem when it comes to crawl budget wastage. It was also indexing a majority of those URLs in Google.

Any URLs that are duplicated pages using URL parameters should be canonicalized back to the first page.

Likewise if you aren’t using infinite scrolling for your category pages, you’ll likely be using different pages for your category pages, e.g. example.com/cat/page1 – These need to both have the canonical tag back to the main page, as well as have pagination correctly setup for the page.

Pagination is pretty easy for category pages, you just want to do the following setup –

- The main cat page should have the rel="next" link to page 1

- The page along from this should have rel="prev" back to the main cat and rel="next" to the page onward from this

- The last page should just have rel="prev"

You also want to make sure that you aren’t including any of these pages in your sitemaps, most automated sitemap builders will end up including them but this is another wastage of crawl time.
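
As a sketch of that setup, the Python function below works out which link tags each page in a paginated category should carry: a canonical back to the main category page, plus rel prev/next where a previous or next page exists (so the last page only gets a prev link). The function name and URLs are hypothetical, and it assumes the first URL in the list is the main category page.

```python
def pagination_tags(page_urls, index):
    """Return the link tags for the page at `index` in a paginated series.

    page_urls[0] is assumed to be the main category page.
    """
    tags = [f'<link rel="canonical" href="{page_urls[0]}">']
    if index > 0:
        tags.append(f'<link rel="prev" href="{page_urls[index - 1]}">')
    if index < len(page_urls) - 1:
        tags.append(f'<link rel="next" href="{page_urls[index + 1]}">')
    return tags


# Hypothetical paginated category.
pages = [
    "https://example.com/cat/",
    "https://example.com/cat/page1",
    "https://example.com/cat/page2",
]

for i, url in enumerate(pages):
    print(url)
    for tag in pagination_tags(pages, i):
        print("  " + tag)
```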

NoIndex & Crawl Budget Analysis

Not only do you want to check that your site isn’t noindex’ing any valuable pages, but you also want to analyze for pages that you want to noindex to stop crawl wastage and prevent algorithmic thin content penalties.

It’s often a good idea to noindex any privacy policy, thin FAQ, financing etc pages that are very unlikely to have any users actually searching for, or are just a complete waste of Googlebot’s precious time on your site.

The best way to analyze this to combat crawl wastage is using server log analysis, which we’ll cover down below.

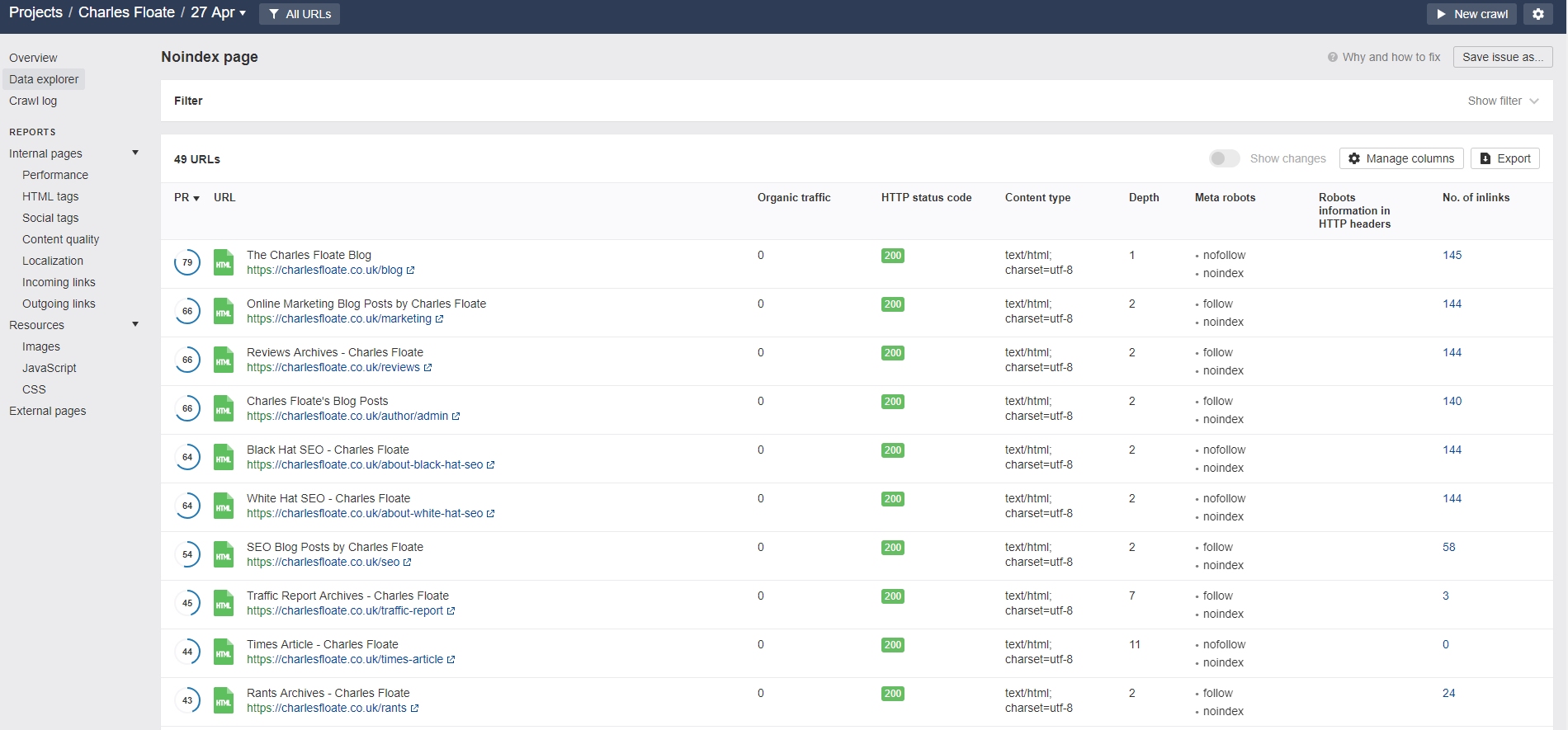

Most OnPage tools like the Ahrefs site audit tool will have built-in noindex page lists, like –

These make for excellent overviews of pages that may have been wrongfully noindex’d. We recently discovered several subcategories were noindex’d (for no apparent reason, thanks Shopify) on one of our clients’ sites. Removing the tag and reindexing the pages has seen direct revenue gains from those pages in a matter of weeks.

There are also a fair few other things you can do to optimize your crawl budget and prevent crawl wastage –

Minimize Internal Redirect Chains

Though multi-layer internal redirects don’t tend to cause any loss of link juice, they do add precious load time for bots and are fairly easy to fix. You’ll be able to export all the internal redirects that an OnPage auditing tool has found on your site, and simply sort by redirect chains that have more than 2 URLs.
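
If your tool only exports individual redirects (source and target) rather than full chains, you can stitch the chains together yourself. This is a minimal Python sketch with hypothetical function names and paths; it assumes each source URL redirects to exactly one target, and it only follows chains from URLs that nothing else redirects to, so a pure redirect loop with no entry point is not reported.

```python
def redirect_chain(start, redirects):
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop, stop following
            break
        seen.add(target)
    return chain


def long_chains(redirects, max_urls=2):
    """Return the chains that contain more than `max_urls` URLs."""
    targets = set(redirects.values())
    starts = [url for url in redirects if url not in targets]
    chains = (redirect_chain(url, redirects) for url in starts)
    return [chain for chain in chains if len(chain) > max_urls]


# Hypothetical export: source URL -> redirect target.
redirects = {
    "/old-sofas": "/sofas-2017",
    "/sofas-2017": "/sofas/",
    "/contact-us": "/contact/",
}

print(long_chains(redirects))
```

Each chain it returns can then be flattened by pointing the first URL straight at the last one.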

Keep On Top of 404s & Broken Linking

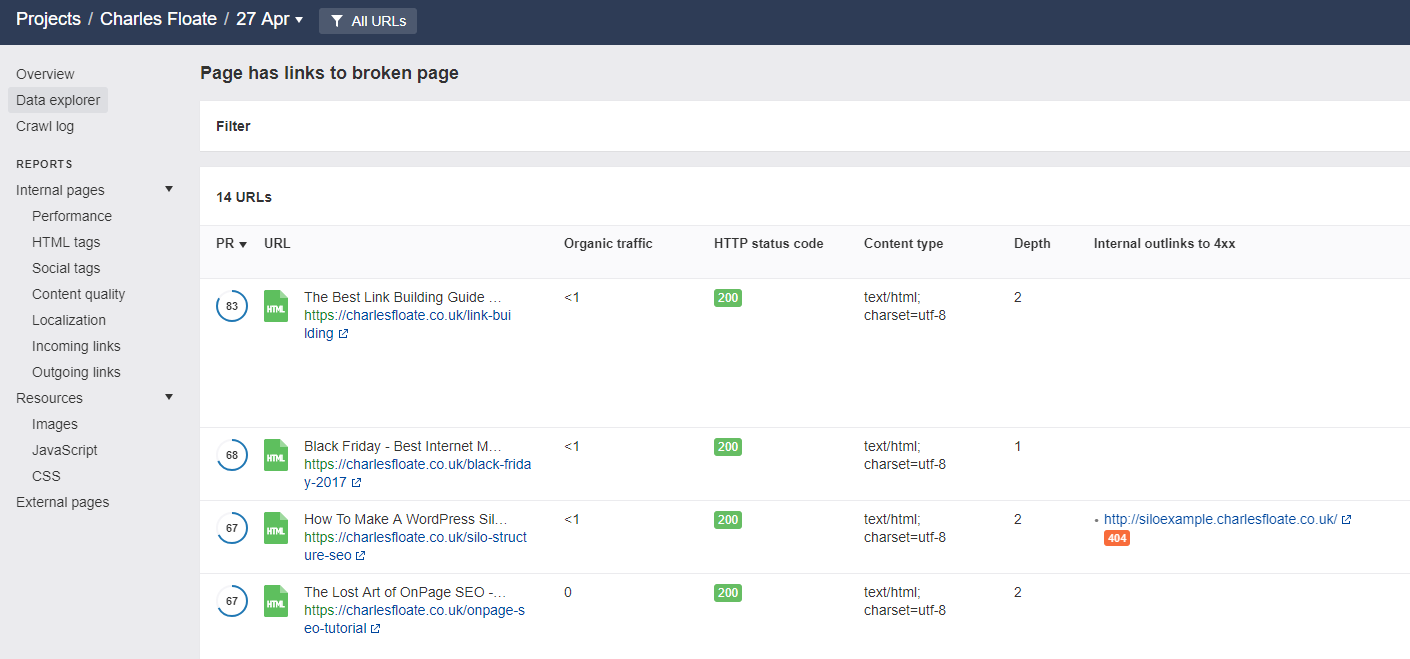

We’ve already covered 404 analysis, but Ahrefs Site Audit tool (and pretty much every other OnPage auditing tool) also monitors for broken internal linking. Sending bots through to 404’d internal pages is a loss of internal link juice, and a wastage of crawl budget –

Likewise, you can also check via the Ahrefs site explorer for broken external links that could be re-utilized –

These are both huge sources of crawl wastage.

Speed Optimization

The types of speed optimization you’re going to need will depend massively on your CMS and current hosting setup.

A super easy technique that has come to light recently (hat tip to Andrew Halliay) is switching the HTTP protocol to HTTP2. This loads your site’s assets in parallel over a single connection, rather than loading element by element (which is what gives you that horrible waterfall graph on GTMetrix or Pingdom).

We’ve seen load times dropped by in excess of 70% due to this one, fairly simple switch. It does require SSL, though as I said earlier, every ECommerce site should be running an SSL certificate by now.

Kinsta did an excellent guide on how to use HTTP2.

There are also a boat load of other techniques to lower load times –

- Image Compression – Both server and CMS level image compression, but this massively depends on your setup.

- Minifying Code – HTML, CSS, Javascript etc.. Can all be minified and optimized to be as short as possible, and thus load faster with smaller file sizes.

- Google Tag Manager – If you’re using multiple tracking codes, you can synchronize them all via Google Tag Manager, making external script loading much faster.

- CDNs & Cloud Hosting – You can also leverage content delivery networks (which load images etc from closer data centers than your main hosting) or cloud hosting, which essentially sets your site up on a network of servers and loads the site from the server closest to your visitor.

We’re seeing site speed correlate massively with rankings in the SERPs right now –

It’s no surprise considering Google’s announcement that page load time will directly affect your AdWords quality score, that they’d put site speed as a large factor in the ranking algo. Especially with the move to mobile first indexing, mobile users tend to be very impatient.

Technical OnPage Techniques

There are a lot of small, advanced OnPage bits and pieces that you’ll run into along the way. I’ve covered a couple technical techniques here that can massively benefit your SEO and content campaigns for ECommerce sites.

Server Log Analysis

So firstly, thanks to Charles for letting me write this section. Let me introduce myself: my name is Andy and I am a technical and server log specialist. I currently run my own technical agency called OnPage Rocks, focusing on technical audits and server log analysis, but I was lucky enough to learn my skills and knowledge in house at one of the UK’s top 100 ECommerce sites, which was in the top 3 in its niche. This site would compete with the big players like Amazon on a daily basis. After a while, we would regularly outrank Amazon for high value keywords and be number 1.

Sorting out the technical side of things was one of the key reasons we ranked so well.

The site was a dream – It’s the size that most SEOs never get to experience, and I was the Head of SEO and PPC when I left the business – So I got the opportunity to pull large data sets to analyze and got to run some cool tests.

I am very much a believer in “you wouldn’t build a million dollar house on quicksand, so don’t try and build a million dollar website on poor foundations” –

This is especially true for large ECommerce sites.

The majority of Server Log Analysis for ECommerce sites isn’t much different to regular sites and I wrote a really good intro to Server Log Analysis on the SEO.Institute site so I don’t want to cover the same things here, if you are unsure of what Server Log analysis is and want to understand the basics, read that post first.

Instead I am going to cover the additional elements of Server Log Analysis for ECommerce Sites and get quite technical and specific.

Where is Googlebot Crawling

The logs are the only accurate place to get this info, as Google Search Console doesn’t provide this data. They have a few pretty little graphs which don’t really tell you a lot, and they definitely don’t tell you when or where Googlebot has crawled.

These are the things you should be looking out for in the Logs:

Category Pages

If you have set up your ECommerce site correctly, the home page will only rank for brand terms, but it’s your category pages which will drive most of your organic traffic. These will also be your biggest drivers of new visitors to your site.

Making sure Googlebot is crawling these frequently and not finding any errors is key.

Sub category pages are the next most important. These usually have less search volume than the more generic categories, but they are higher converting, so it’s crucial you monitor them to make sure Google is frequently hitting these pages and seeing any changes.

How Deep Are They Crawling?

As well as monitoring how often they are crawling your key pages, knowing how deep they go is important.

Do they just hit your homepage and category pages, or do they crawl your entire site, including terms and conditions pages and product pages?
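
To give a feel for what pulling this out of the logs looks like, here is a minimal Python sketch that counts Googlebot hits per URL and per URL depth. It assumes your server writes the common “combined” access log format; the sample log lines, paths and helper names are made up for illustration. It also trusts the user agent string, which can be spoofed, so a real analysis should verify Googlebot’s IPs with a reverse DNS lookup as well.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_hits(lines):
    """Yield the parsed fields of every log line whose user agent is Googlebot."""
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            yield match.groupdict()


def depth(path):
    """Number of path segments, e.g. /beds/sofa-beds/ has a depth of 2."""
    return len([part for part in path.split("?")[0].split("/") if part])


# Hypothetical log lines.
sample_log = [
    f'66.249.66.1 - - [12/Mar/2018:06:25:24 +0000] "GET /beds/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [12/Mar/2018:06:25:30 +0000] "GET /beds/sofa-beds/ HTTP/1.1" 200 4310 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [12/Mar/2018:07:01:02 +0000] "GET /beds/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [13/Mar/2018:06:20:11 +0000] "GET /beds/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [13/Mar/2018:06:21:45 +0000] "GET /terms-and-conditions HTTP/1.1" 200 900 "-" "{GOOGLEBOT_UA}"',
]

hits = list(googlebot_hits(sample_log))
by_path = Counter(hit["path"] for hit in hits)
by_depth = Counter(depth(hit["path"]) for hit in hits)

print(by_path.most_common())
print(sorted(by_depth.items()))
```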

When Are They Crawling?

As well as how deep and where they are crawling, when they crawl is crucial for ECommerce sites. I am assuming you have set up your site correctly and added product schema to the pages, so that price, reviews, stock status etc. are showing in the SERPs.

Google has to crawl the page to see updates. Review stars aren’t that time-sensitive, unless you have a product showing a negative rating in the SERPs and you’ve suddenly had quite a few positive reviews come in, which could boost CTR.

However, for pricing and stock levels it’s quite important, as it can affect bounce rate and we know that’s bad for SEO.

Say you sell product ‘x’ priced at $99.99, and then the price changes to $149.99. Someone seeing the old $99.99 price in the SERPs could be really tempted to purchase, but when they click through they see the true price of $149.99. Firstly, they are going to bounce back to the SERPs, and that can and does lower your rankings. Secondly, that user’s perception of your brand has been damaged (through no real fault of your own – you don’t technically manage the SERPs).

Knowing this info is important, and it’s also why it’s important to have the sitemap (we will cover sitemaps later) set up correctly with the last modified date, so Google knows when something has changed.

When is also important for content changes. If you update category ‘x’ content on a Monday morning, but Googlebot crawled the page on Sunday and regularly only hits that page once a week, it’s going to be another week before it sees the changes. If it can’t see the changes, they can’t impact rankings, which in turn slows down your campaign.

Let’s say you update your content on the Thursday before Black Friday – Mention Black Friday in your meta descriptions, titles etc to drive more people to click on your site. If Googlebot doesn’t crawl your site on either the Thursday after the changes have been made or the Friday, it will have been a waste of time as it won’t change in the SERPs and you’ve missed the biggest sales holiday of the year.
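
A simple way to catch this is to compare when each page was last changed against when Googlebot last requested it. The Python sketch below assumes you have already pulled (path, timestamp) pairs for Googlebot out of your logs, with timestamps in the usual access log format; all of the paths, dates and function names are hypothetical.

```python
from datetime import datetime


def parse_log_time(value):
    """Parse an access log timestamp such as 22/Nov/2018:09:00:00 +0000."""
    return datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z")


def last_crawled(hits):
    """Map each path to the most recent time Googlebot requested it."""
    latest = {}
    for path, crawled_at in hits:
        if path not in latest or crawled_at > latest[path]:
            latest[path] = crawled_at
    return latest


def not_recrawled(updated_at, latest):
    """Paths that changed but have not been crawled since the change."""
    return sorted(
        path
        for path, changed in updated_at.items()
        if latest.get(path) is None or latest[path] < changed
    )


# Hypothetical Googlebot hits taken from the logs.
googlebot_requests = [
    ("/beds/", parse_log_time("11/Nov/2018:03:05:00 +0000")),
    ("/beds/", parse_log_time("18/Nov/2018:03:10:00 +0000")),
    ("/sofas/", parse_log_time("22/Nov/2018:21:40:00 +0000")),
]

# Hypothetical content updates made on the Thursday morning.
thursday = parse_log_time("22/Nov/2018:09:00:00 +0000")
content_updates = {"/beds/": thursday, "/sofas/": thursday, "/desks/": thursday}

stale = not_recrawled(content_updates, last_crawled(googlebot_requests))
print(stale)
```

Anything in the resulting list is a page where the SERPs are still showing the old version.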

Variations of The Same Product

This is a real example; just the name and niche have been changed. Let’s take Luke, who runs an ECommerce site selling office furniture.

He has a desk and has set it up correctly, so for the different variations it’s just one product page with filter options. He sells the desk in two sizes.

1.5 metres and 2 metres long.

The desk comes in three colours

Oak, white and black.

You can also decide on the material of the feet

Either wood or chrome.

Luke thinks he did a great job. He created one specific landing page where he can send all his traffic and try to upsell and convert. It’s nothing new; Amazon and all the big sites do this.

However, what Luke didn’t realise is that clicking on the options actually modified the URL slightly, by adding a ? or something else onto the end of it. So instead of him having 1 page which Google needs to crawl, there are in fact quite a lot of different URLs Googlebot is crawling. I have seen one example where the URL in the browser didn’t change but Googlebot was still crawling additional URLs, as the filtering was done using JavaScript – Only the server logs could have told us about these additional URLs, and in turn we could then get them fixed.
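
With two sizes, three colours and two types of feet, Luke’s single desk page can turn into up to 2 × 3 × 2 = 12 combinations before you even count partial selections. A quick way to spot this in the logs is to group the URLs Googlebot requested by their base path and list the query strings seen for each. This Python sketch uses hypothetical paths and parameter names.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def parameter_variants(requested_paths):
    """Group crawled URLs by base path and list the query strings seen for each."""
    groups = defaultdict(set)
    for raw in requested_paths:
        parts = urlsplit(raw)
        if parts.query:
            groups[parts.path].add(parts.query)
    return {path: sorted(queries) for path, queries in groups.items()}


# Hypothetical URLs requested by Googlebot, taken from the server logs.
crawled = [
    "/desks/",
    "/desks/executive-desk",
    "/desks/executive-desk?size=1.5m",
    "/desks/executive-desk?size=2m&colour=oak",
    "/desks/executive-desk?colour=white&feet=chrome",
    "/desks/executive-desk?size=1.5m",
]

variants = parameter_variants(crawled)
for path, queries in variants.items():
    print(path, len(queries), queries)
```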

Faceted Navigation

A question I often get asked – is faceted Navigation good for SEO?

There are quite a few good reasons to open up faceted Navigation and create specific landing pages, the problem comes when you open up faceted navigation to Googlebot (and I guess other bots).

You have a crawl budget and opening up too much too quickly can mean Googlebot can spend more time crawling and finding these new pages and not your current pages, which has the potential to impact your current rankings.

Yes it does, I should know I’ve made this mistake before.

What you need to do is plan ahead and do it in stages.

You need to be closely monitoring your logs. I have a tool which spits my logs out daily into Google BigQuery, and then I have automated reports which run off the back of this – I closely monitor my logs and spot spikes and dips.

While you don’t need to go to this extreme, you do need to get the logs daily and create something similar in Excel.

You already know which pages they aren’t crawling, and if Google isn’t already crawling above 90% of your site frequently, I wouldn’t think about faceted navigation at the moment.

So Googlebot is crawling most of your site and you’re thinking of opening up your faceted navigation. Start slowly: identify the key pages you want to get indexed first and release these, then let Googlebot do its thing and crawl and index these pages. Once you have monitored the logs and confirmed Googlebot is still crawling the other pages as frequently as before, as well as your new pages, open up more.

PLEASE DO NOT just open up all your new faceted navigation at once – Your rankings potentially will be impacted.

Faceted Navigation is great when you are a big brand with a huge crawl budget and huge Domain Authority (I am talking about Google’s internal version and not the Moz metric) as Google is likely to find these additional pages and with your authority, allow them to rank quite easily.

If you’re John’s Furniture Store, which is very new and has no real authority, then opening up faceted navigation can hurt your overall rankings – It’s not for all sites. Yes, it can bring huge benefits, but it can also affect your other pages.

Mobile Bot vs Desktop Bot

Google claims that they will alert webmasters via Google Search Console when they are part of the new mobile index, but I have heard of very few people actually getting this message. However, John Mueller said publicly that the best way to know you are part of the new mobile index is when Google’s mobile bot crawls more frequently than Google’s desktop bot. He said traditionally the split will be 80/20, so 80% of activity will be the desktop bot and 20% the mobile bot, and that once you are part of the mobile index that split will reverse to 20% desktop and 80% mobile.

From all the sites I monitor, it’s more likely to be about 65% mobile once you are part of the mobile index, but you can definitely see a switch in your log data (another reason I chart this, so I can spot it).

For most of these sites I haven’t had the message in GSC to say that the site is now part of the mobile first index, so don’t rely upon that method.

You could spend a lot of resources trying to make your site more mobile friendly when it’s already part of the index, just because you haven’t received a message from Google.
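
Working out the split from your logs only takes a few lines. The Python sketch below is a rough approach, not an official method: it treats any Googlebot user agent containing “Mobile” as the smartphone crawler and everything else as desktop, which means other Google crawlers such as Googlebot-Image get lumped in with desktop. The function name and sample data are hypothetical.

```python
def bot_split(user_agents):
    """Return the share of Googlebot hits from the mobile and desktop crawlers."""
    mobile = desktop = 0
    for agent in user_agents:
        if "Googlebot" not in agent:
            continue  # ignore browsers and other bots
        if "Mobile" in agent:
            mobile += 1
        else:
            desktop += 1
    total = mobile + desktop
    if total == 0:
        return None
    return {"mobile": mobile / total, "desktop": desktop / total}


DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
MOBILE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/41.0.2272.96 Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)

# Hypothetical day of log data: 3 mobile hits, 1 desktop hit, 1 real visitor.
agents = [MOBILE_UA, MOBILE_UA, DESKTOP_UA, MOBILE_UA, "Mozilla/5.0 (Windows NT 10.0)"]

split = bot_split(agents)
print(split)
```

Run this per day and chart the mobile share over time; the switch described above shows up as a clear step change.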

Sitemaps

This is more important on large ECommerce sites, if you have 100 products then this section isn’t going to be relevant, but for large sites which have products coming in / out of stock regularly, then making sure the sitemaps are up to date and correct is important.

Log data will help here – Firstly, you can see how frequently Google is hitting each sitemap. Again, I am assuming you’re a large site that has needed to split up your sitemaps. Even if you’re not that huge, I still recommend it: product pages sitemap, category pages sitemap, blog pages sitemap etc – This way it’s easier to maintain and to see when Google crawls each part – Plus it helps when you start scaling, as it’s one thing that’s already done.

There are many great reasons why all sites should be analysing their logs, but the biggest sites on the internet tend to be ECommerce websites, and they have a lot more issues than the majority of sites, so it’s crucial you get to understand your logs.

If you have any specific questions, relating to your logs, feel free to ask me anything, I am always willing to help.

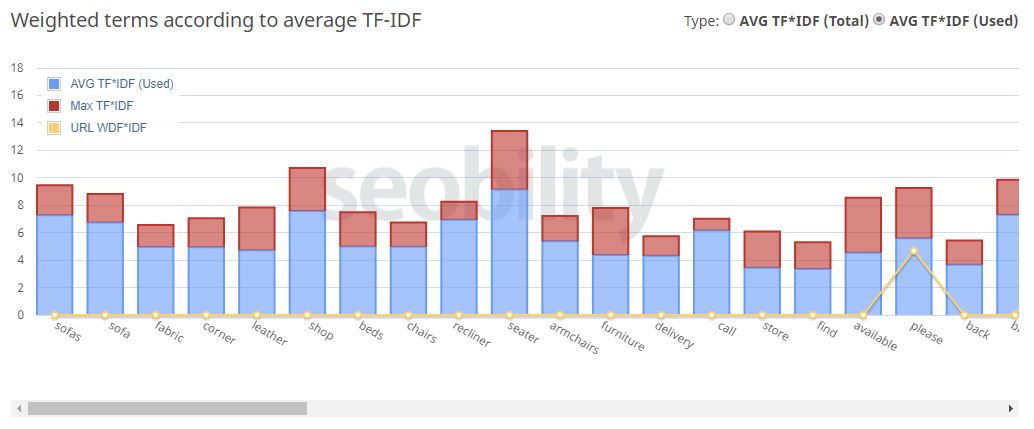

TF*IDF

This is one of my favourite tactics, and one that is grossly underutilized and underappreciated in the SEO community.

Essentially, we use TF*IDF (term frequency multiplied by inverse document frequency) as a way of reverse engineering the Word2Vec algorithm that Google directly attributed to its use in RankBrain’s machine learning analysis of page content.

Another shout out to Nick Eubanks, he did an epic post here going into much more detail on the subject than I will.

RankBrain is the evolution of keyword density, it looks at keyword usage by humans too and the correlation between the distances of those keywords topically.

As an example, if we were trying to rank the Wayfair UK Sofa page ( https://www.wayfair.co.uk/furniture/cat/sofas-sofa-beds-c493379.html ) around the keyword “sofas uk”, then we could analyze the page we own as well as the ~100 or so pages that Google gives us for keyword usage –

We can then use this data to optimize our own content around the various keyword usage of the top 100 ranking pages. Even small notes like “sofas” has more usage than “sofa” and “shop” has much more usage than “store” can give us a significant hand with our content and OnPage optimization around keyword groups.
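
If you want to see what is going on under the hood of the TF*IDF tools, here is a minimal Python sketch of the calculation. It uses one common formulation (term frequency as a share of the words on the page, multiplied by the natural log of total pages over pages containing the term); commercial tools may weight things differently, and the three short “pages” are made-up stand-ins for the text of ranking pages.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tf_idf(documents):
    """Return one {term: score} dict per document."""
    tokenized = [tokenize(doc) for doc in documents]
    total_docs = len(tokenized)

    # Document frequency: how many pages use each term at least once.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        length = len(tokens) or 1
        scores.append({
            term: (count / length) * math.log(total_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


# Hypothetical text from our page (first) and two ranking pages.
pages = [
    "sofas uk shop sofas",
    "sofa store uk",
    "shop sofas online uk",
]

scores = tf_idf(pages)
for term, score in sorted(scores[0].items(), key=lambda item: -item[1]):
    print(f"{term}: {score:.3f}")
```

Terms that every page uses (like “uk” here) score zero, while terms that are frequent on one page but not universal stand out, which is the signal you compare between your page and the pages that rank.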

Link Building For ECommerce Sites

ECommerce link building is trickier, in the sense that it is harder to get links to category and product pages than it is to build links to review articles or large blog posts like on affiliate sites. It’s far from impossible however, and there are several techniques you can use to make it easier to get links directly to these pages.

I’ll also be showing you tactics you can use to link build to other pages that in turn help rank your main page.

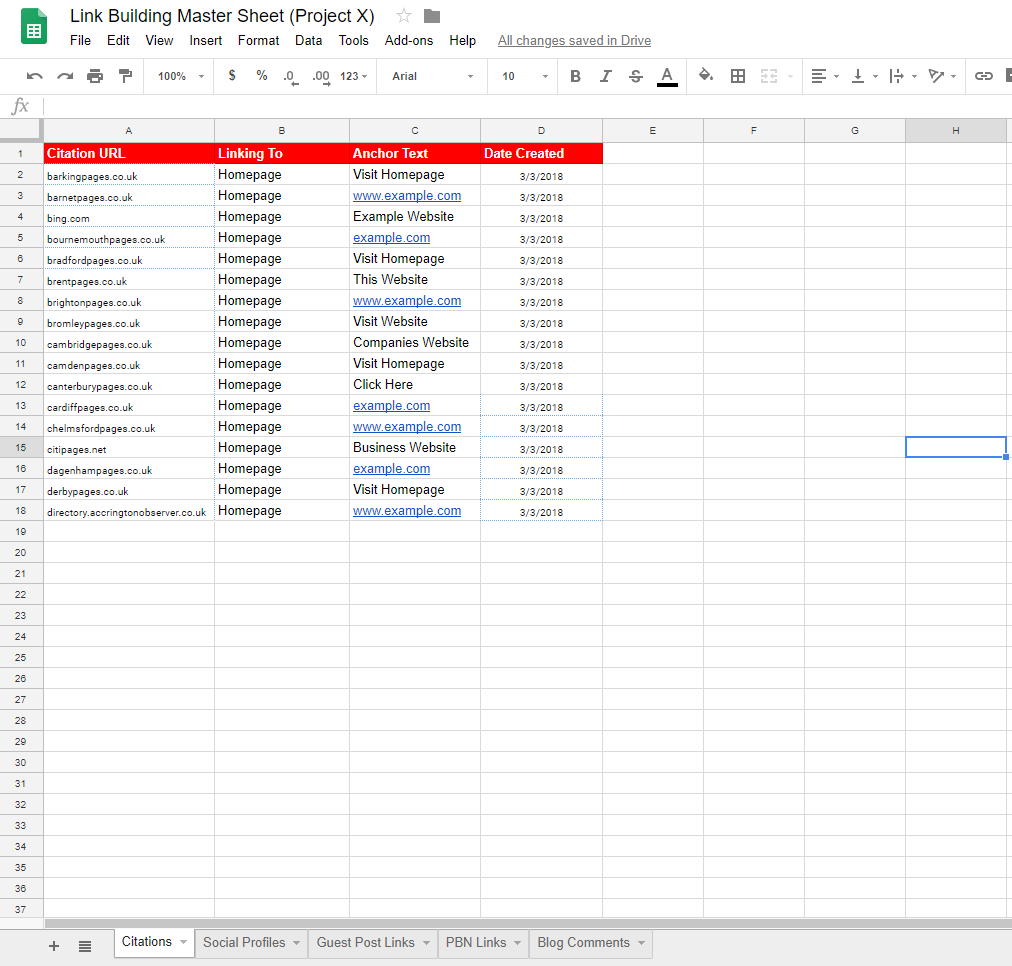

Link Tracking Master Sheet

When it comes to link building on this kind of scale and often for a very valuable site, it’s always a good idea to keep track of all the links you build in a campaign.

We do this with a link building master sheet. Even if we don’t have the actual URL of the link built, we still put in the URL and anchor we built it to.

This makes it super easy to collate anchor clouds for specific pages and to track the number of links to specific pages, link velocity, etc. Here’s a small example I created of what a spreadsheet like this would look like –

Obviously you’ll want to tailor it to your specific needs when it comes to tracking and the link types built.

You can also use Excel to further generate the % of times each anchor text has been used –

Image Source: AbleBits Tutorial

This is much more reliable than any Majestic or Ahrefs word clouds/percentages too (provided you have started a campaign from scratch, or collated data into this sheet from multiple sources), because it accounts for every link you have purposefully built to the site or found in your link research.
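If you’d rather not do the percentages in Excel, the same calculation is only a few lines of Python. This is a rough sketch that assumes the master sheet has been exported as CSV. The column names (`target_url`, `anchor`, `link_type`) and the sample rows are made up for illustration, so rename them to match your own sheet.

```python
import csv
import io
from collections import Counter

# Hypothetical export of a link building master sheet.
SHEET = """target_url,anchor,link_type
/beds,Wayfair Beds,guest post
/beds,Beds UK,guest post
/beds,Wayfair,citation
/beds,Wayfair,social profile
/sofas,Sofas UK,guest post
"""


def anchor_percentages(rows, target_url):
    """Return {anchor: % of links} for one target page, ignoring case."""
    anchors = Counter(
        row["anchor"].strip().lower()
        for row in rows
        if row["target_url"] == target_url
    )
    total = sum(anchors.values())
    if not total:
        return {}
    return {
        anchor: round(100 * count / total, 1)
        for anchor, count in anchors.most_common()
    }


rows = list(csv.DictReader(io.StringIO(SHEET)))
for anchor, pct in anchor_percentages(rows, "/beds").items():
    print(f"{anchor}: {pct}%")
```

Swap `io.StringIO(SHEET)` for `open("your-export.csv", newline="")` to run it against a real export.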

Anchor Text

Anchor text in ECommerce is highly important. It’s likely that you’ll have hundreds or even thousands of keywords on any specific page. We like to build our anchor text profiles around that of highly ranking, competing sites and mix in our own flavour too.

If we were targeting the Wayfair Beds page, and we were building 10 links, I’d likely go with the following anchors –

- Wayfair Beds

- beds | wayfair.co.uk

- Beds UK

- Beds on Wayfair

- Wayfair

- Wayfair.co.uk

- www.wayfair.co.uk/furniture/cat/beds-c224687.html

- buy beds here

- Beds Online

- Beds At Wayfair

Note that we use the brand directly to category pages as well. This is totally fine and actually encouraged. A lot of the big sites have purely branded anchors to inner category pages.

I also like to use as many different anchors as possible, rather than repeating the same anchor multiple times. It makes for a much more diverse and natural looking anchor text profile.

We recently re-worked the content on a page on a client site and added just 1 link to it. We saw a gigantic jump in rankings and traffic for the page, mainly down to our content optimization and specialized anchor choice –

We didn’t go with the specific keyword itself, but instead targeted the “UK” variant of the main keyword in our anchor and built the link from a .uk TLD. As a result, all of our UK phrases, which were significantly less competitive than the main keyword and its variants, jumped up massively.

Content / Link Asset Creation

A lot of big sites do this, but forget to internally link the pieces back to relevant categories or products on the page. This misses out on a lot of link juice. If you’re doing any sort of a content campaign for the site itself, then I highly recommend looking at the internal linking between these pages and the pages you’re actively trying to rank.

The premise for this technique is you create an asset that will both naturally attract links and is significantly easier to build links to than, say, a beds category page. This can be a history piece, an interactive piece of content, a tool, a blog post, an infographic, a video or any number of things. It just has to be a good enough reason to get sites to link to it.

We often use Mention Me by Gareth Simpson, as they have an internal content service that is designed to make landing pages and informational content pieces by British writers.

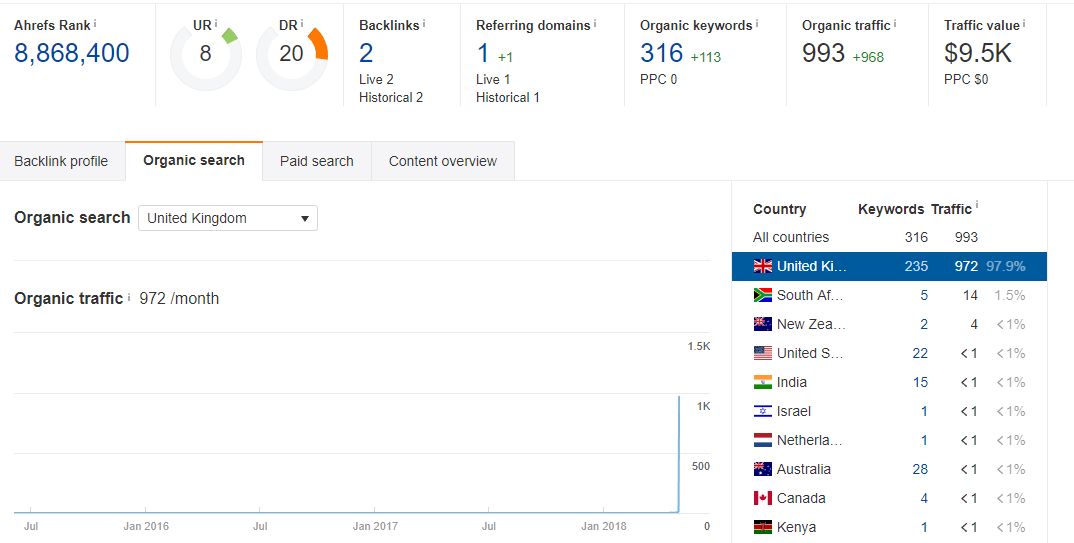

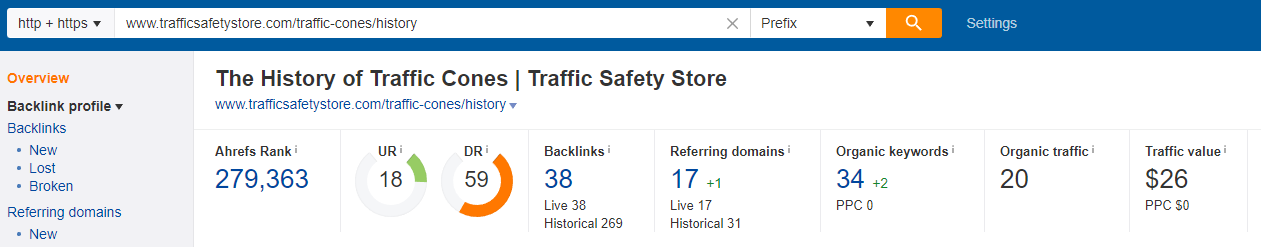

A perfect example of such a piece of content (minus the fact that it lacks an internal link, though the specific URL hierarchy helps it pass the topical authority it needs to anyway) is a piece on Traffic Safety Store about the history of traffic cones. The site is SEO’d by Nick Eubanks, who is excellent at ECommerce SEO, and I recommend you check out his company’s blog here –

https://www.trafficsafetystore.com/traffic-cones/history

This piece is directly topically relevant to the category page it’s supporting and encompasses the URL topical authority style we talked about earlier.

It’s an infographic supported by physical written content, which is a nice touch as a lot of companies simply insert an infographic image on a page but forget to put any sort of content around it.

It’s no surprise that it’s “attracted” (Though most seem manufactured to the trained SEO eye) links from the likes of Wikipedia, large infographic submission/blog sites and relevant industry sites –

It’s even managed to rank for a few keywords of its own.

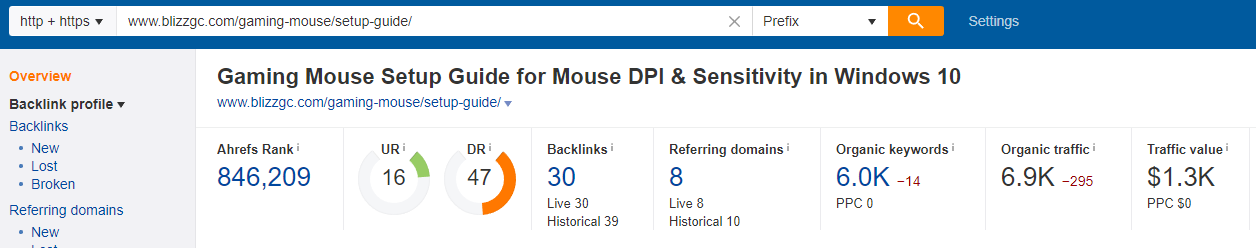

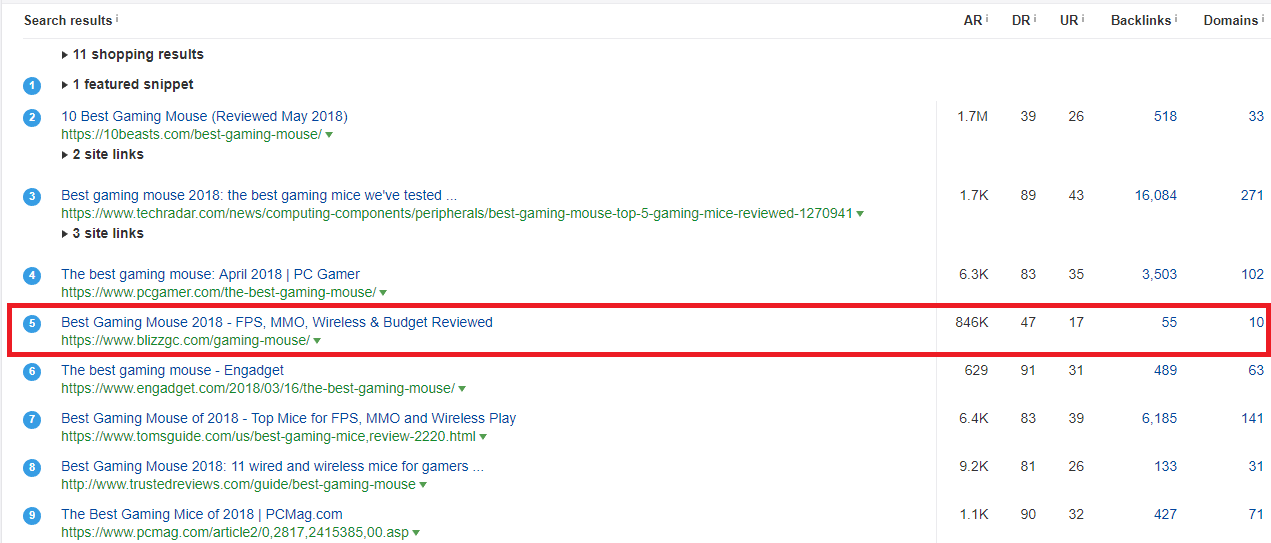

Another great example of this type of asset creation is on an affiliate website that has shot up for “Gaming Mouse” related keywords –

This content piece has begun to rank for a significant number of its own keywords, whilst also “attracting” links from hyper relevant blogs and forums.

A 2,600 word, well designed and optimized guide that is highly relevant to the money page it is trying to rank. If you were Razer, this would be an excellent supporting piece to your gaming mouse category page.

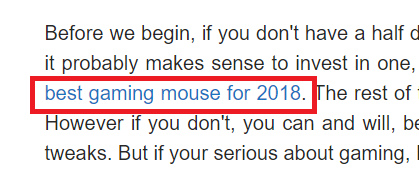

They’ve also added a fantastic anchor right at the top of the page as well –

This gives the main page even more of a push. You can see it’s ranking pretty highly for a very competitive keyword vs pages with significantly more links than it –

I put these kinds of high rankings down largely to the excellent pieces of supporting content that the site owner has created around the main page.

These types of content aren’t exactly difficult to create, are fairly inexpensive and can have a massive impact on the rankings of a site or page.

I highly recommend using the above URL hierarchy technique Nick used on this website, but you can also create content/link assets that sit on their own URL structure and can internally link and pass authority to multiple pages.

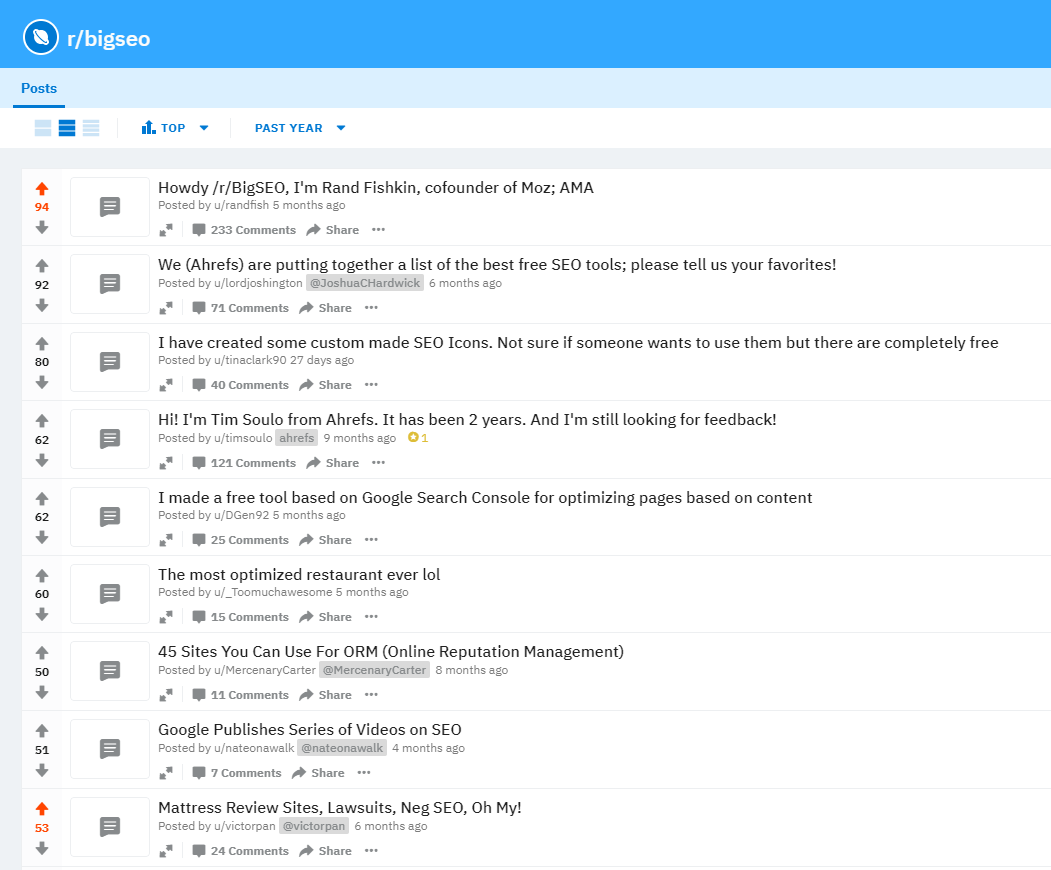

If you are finding it hard to come up with supporting content ideas, then here are a few techniques I’ve used in the past –

- Check the “Top” submitted posts on a relevant subreddit for content ideas.

- Use the broken link building tactic of finding 404’d pages on competing sites or industry relevant blogs. This can also be a great tactic to build links straight off the bat, as well. Ahrefs did an excellent guide here.

- Run competing sites, industry blogs or generalized topical keywords through BuzzSumo or Ahrefs Content Explorer to find highly shared content pieces that you can re-create or do the skyscraper technique on.

- Do an expert round-up of some of the biggest blogs in your industry around trends or recent industry news.

Those are just a few ways to find hundreds of content ideas in minutes. It’s really not difficult. There is so much information out there and it’s often poorly curated.

Realistically, links often won’t come naturally to these pieces, despite what a lot of the big, more white hat focused publications will tell you. It’s not a bad idea to look at tactics like broken link building, blogger or influencer outreach, forums, social sharing and submission sites to start getting those link counts up straight off the bat. The higher you rank for that piece’s own keywords, the more likely you are to attract genuinely natural links in the long run, as well.

Citations

Citations are really important for ECommerce stores that aren’t international and are targeting a specific country.

We don’t personally build citations in-house. Instead we use Citations Builder, who audit both the company we’re building citations for and its competitors’ citations. Their packages are really affordable as well.

For those wanting to do the citations manually, here’s a tutorial on how I go about doing citations for ECommerce sites.

The most important thing when it comes to citations is consistency. You need to make sure that every citation you build has the same data (company name, address, website URL, etc.). The only thing you can change up is your business description, and I actually highly recommend doing so. We just write a fairly large description, use WordAi to spin it to a readable level, then use variations of that spin.
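A quick way to keep yourself honest on consistency is to check every listing against one canonical record. Here is a minimal sketch of that idea. The business details, the directory listings and the field names are all hypothetical, and it deliberately skips the description, since that is the one field you want to vary.

```python
import re

# Hypothetical canonical business details; replace with your own.
CANONICAL = {
    "name": "Example Furniture Ltd",
    "address": "1 High Street, Birmingham, B1 1AA",
    "website": "https://www.example.co.uk",
}


def normalize(value):
    """Collapse runs of whitespace so stray spaces are not flagged."""
    return re.sub(r"\s+", " ", value).strip()


def find_mismatches(citations, canonical=CANONICAL):
    """Return (site, field, found_value) for every field that differs."""
    problems = []
    for site, listing in citations.items():
        for field, expected in canonical.items():
            found = listing.get(field, "")
            if normalize(found) != normalize(expected):
                problems.append((site, field, found))
    return problems


# Hypothetical listings collected from two directories.
citations = {
    "yelp.co.uk": dict(CANONICAL),
    "hotfrog.co.uk": {**CANONICAL, "address": "1 High St, Birmingham, B1 1AA"},
}

for site, field, found in find_mismatches(citations):
    print(f"{site}: {field} is {found!r}")
```

Note that the comparison is intentionally strict: “High St” vs “High Street” is exactly the kind of difference you want flagged.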

First, you want to get your list of citations. As an example, I’ll give you around 10 of the best generic citations for each of the USA, the UK and Canada here –

UNITED STATES

- Google My Business

- facebook.com

- foursquare.com

- bingplaces.com

- citysquares.com

- ezlocal.com

- getfave.com

- hotfrog.com

- manta.com

- yelp.com

UNITED KINGDOM

- Google My Business

- facebook.com

- foursquare.com

- bingplaces.com

- cylex-uk.co.uk

- fyple.co.uk

- hotfrog.co.uk

- yelp.co.uk

- zipleaf.co.uk

- tuugo.co.uk

- misterwhat.co.uk

CANADA

- Google My Business

- facebook.com

- foursquare.com

- bingplaces.com

- yelp.ca

- hotfrog.ca

- n49.ca

- cylex.ca

- fyple.ca

- tuugo-ca.com

You can probably tell that Google My Business is always the #1 citation you can have.

You also want to find citations that are niche relevant to your industry, and citations that are geo relevant to your area.

As an example for niche citations, if you were in the furniture niche you could submit your site to the Furniture News directory: https://www.furniturenews.net/directory

As an example for geo citations, if you were in the West Midlands area in the UK (Which just so happens to be where I’m from) you can submit your business to the Midlands Index: http://midlandsindex.co.uk/

Now that you have your citations list, it’s time to start going out and creating your citations. Some will require you to fill out a form, others will require you to email in your company to them.

I don’t recommend using any paid directories unless you’re going with a large authoritative directory like Best of the Web. There are plenty of free directories to submit to out there, and you aren’t really looking to get any DoFollow links to pass juice to your site. You’re just looking to get geo authority for ranking in your country’s Google TLD (as well as ranking for local maps keywords if you have a physical store on top of the online store) and some additional NoFollow links to help further balance out your link profile.

Social Profiles

If you’re building a brand new site then you want to make sure you have all of your social profiles covered, same as if you have a pre-existing site. This is a super simple tactic that helps brand presence, NoFollow/Branded anchor links, reputation management and branded SERP dominance.

We use KnowEm to scan for our brand names on over 100 different social networks. You don’t need 100 social network profiles, but having the main ones and a few additional sites also helps with the above.

You can also sort KnowEm by industry, to see related social sites to your specific industry as well, such as Travel –

It’s a really handy tool and takes just minutes.

Remember to collate all your profile URLs, usernames and passwords in your link building master sheet.

Guest Posting

Guest posting is the most traditional tactic when it comes to ranking EComm sites. Whether you’re trying to build links to supporting pieces of content or directly to your category page, it can be a massive headache, but it’s still the most reliable way to get powerful links to a site.

There is a dispute in the SEO community over the practices you can use to acquire these links, but we personally pay sites to link to our clients. A lot of the bigger white hat SEOs will give you the misconception that it is cheaper, safer, etc. to do “real content outreach”, but realistically they get very low response rates. A recent study by No Hat Digital got only 55 links from over 40,000 emails sent, which is a prime example of time/resource wastage.

Here’s an example of some of the recent links we got to our clients’ sites and the corresponding TOTAL prices including content creation and VA outreach time –

Example #1 –

- $35 Payment to Site

- $35 Writer Fee

- $3 / Hour VA Fee

Example #2 –

- $50 Payment to Site

- $35 Writer Fee

- $3 / Hour VA Fee

Example #3 –

- $100 Payment to Site

- $50 Writer Fee

- $3 / Hour VA Fee

Example #4 –

- $100 Payment to Site

- $35 Writer Fee

- $3 / Hour VA Fee

Not exactly huge amounts to acquire powerful, low OBL guest post backlinks on real, high traffic sites.

In total for this campaign, we got 15 DoFollow, high quality guest post links (All with high quality, min. 1k word posts and low OBL) for $1,150.
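To put those numbers side by side, here is the arithmetic as a small Python sketch. The totals come straight from the figures above. The two VA hours in the single-link example are an assumption for illustration, since the hours per link aren’t broken out here.

```python
def cost_per_link(total_cost, links):
    """Average spend per link across a campaign."""
    return total_cost / links


def links_per_100_emails(links, emails_sent):
    """Links won as a percentage of outreach emails sent."""
    return 100 * links / emails_sent


def single_link_cost(site_fee, writer_fee, va_rate, va_hours):
    """Total cost of one placement; va_hours is an assumed input."""
    return site_fee + writer_fee + va_rate * va_hours


# Paid campaign above: $1,150 for 15 guest post links.
print(round(cost_per_link(1150, 15), 2))

# Outreach study quoted above: 55 links from 40,000 emails.
print(round(links_per_100_emails(55, 40000), 4))

# Example #1 pricing with an assumed 2 hours of VA time.
print(single_link_cost(35, 35, 3, 2))
```

That works out at roughly $77 per link for the paid campaign, against about one link for every 727 emails in the outreach study.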

Note: You could also build high quality PBN Links at the tier 2 for these kinds of websites, to power them up even further.

I’ll be giving you a tutorial on exactly how to go about doing this kind of outreach below, but if you wanted to just use a service instead, then I recommend the following:

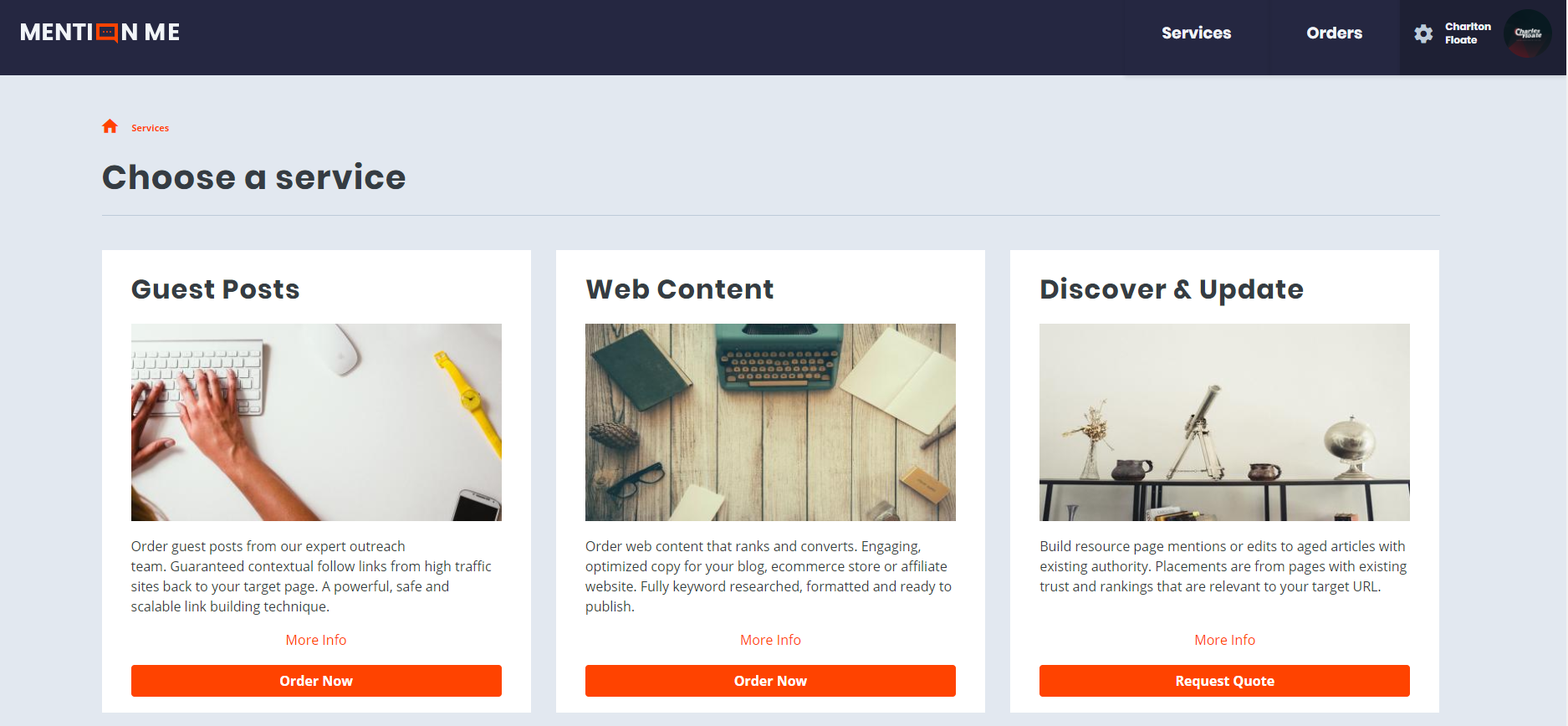

- Mention Me’s Blogger Outreach by Gareth Simpson – Best agency level guest posting service out there. Gareth has pre-existing relationships with over 10,000 sites and also does custom outreach campaigns for larger projects. Bear in mind that Gareth’s service is private and invite only, so make your application appealing. These link campaigns aren’t on the cheap side either!

- Love To Link by Dan Parker – With a few hundred sites to pick from, Love To Link has an excellent array of sites to get links from and get that juice flowing.

- SERP Ninja Niche Edits – Another good way to gain links is getting links from pre-existing pages.

- Loganix Editorial Links – If you’re looking for easily accessible links on highly valuable authority sites (I’m talking Entrepreneur.com, Inc, Forbes Features & More) then Loganix has a massive list of highly regarded authority sites with a super easy to use dashboard.

All of the above services we have used on our own client sites, and can vouch for them.

How To Do Blogger Outreach

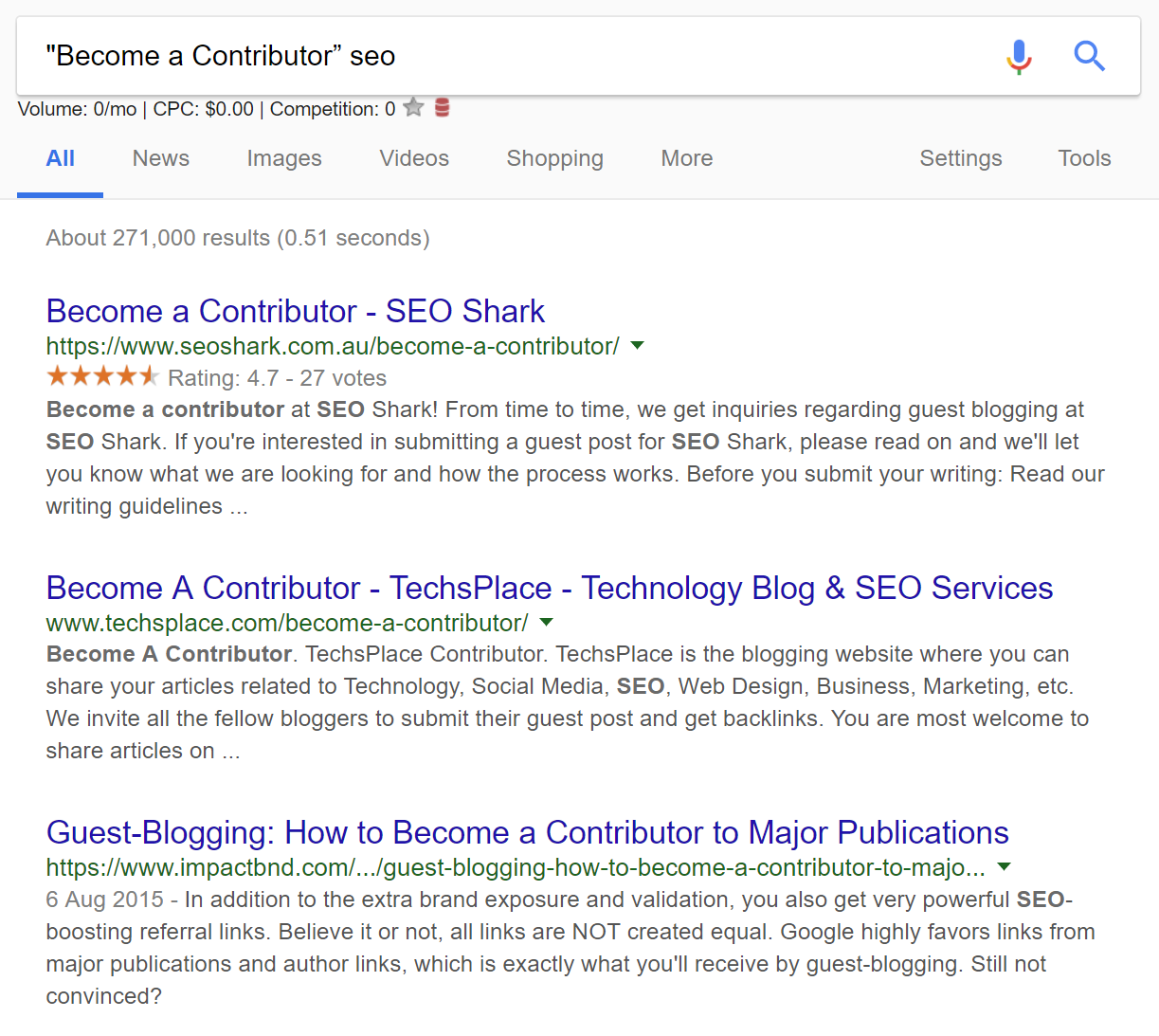

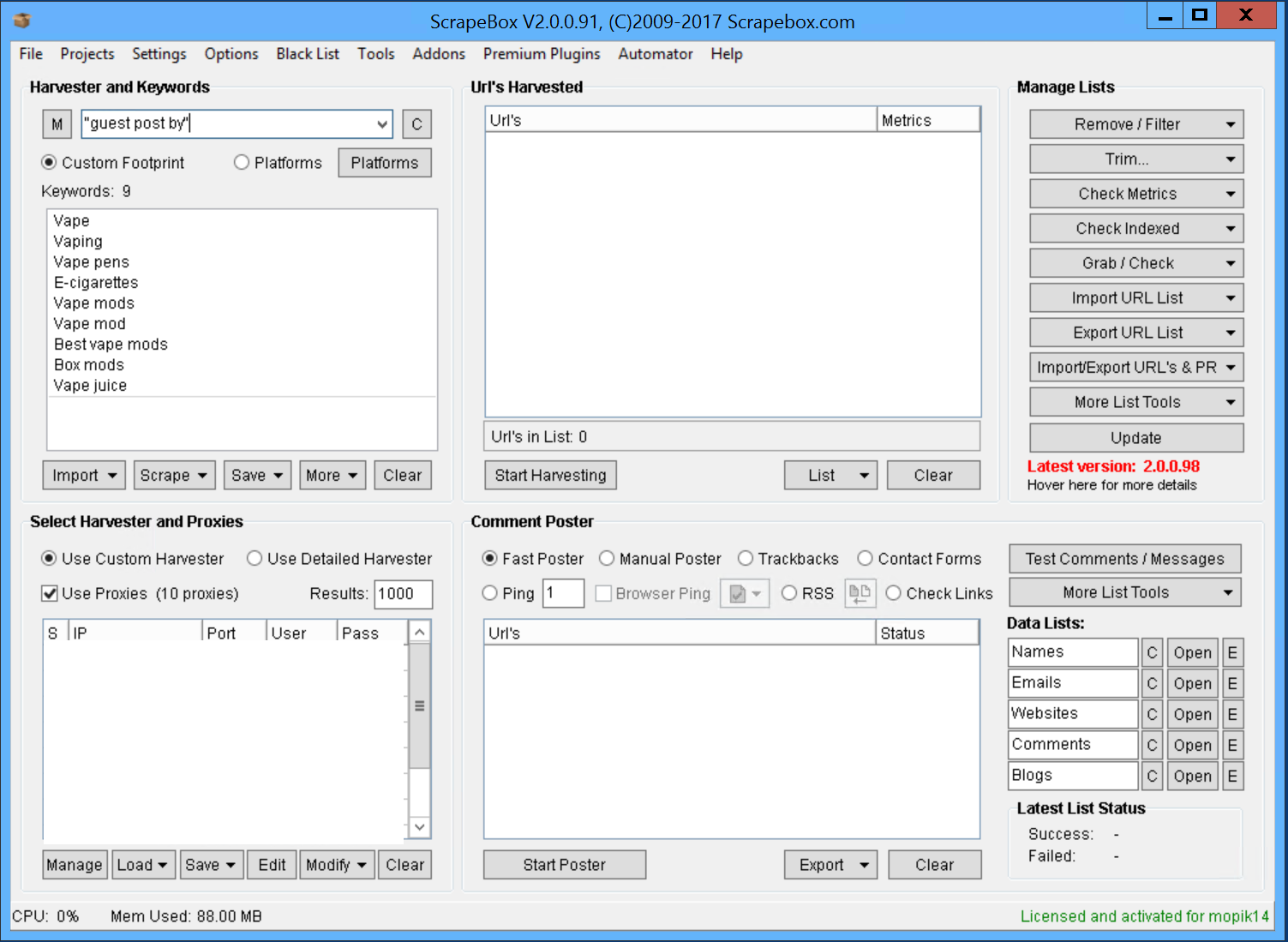

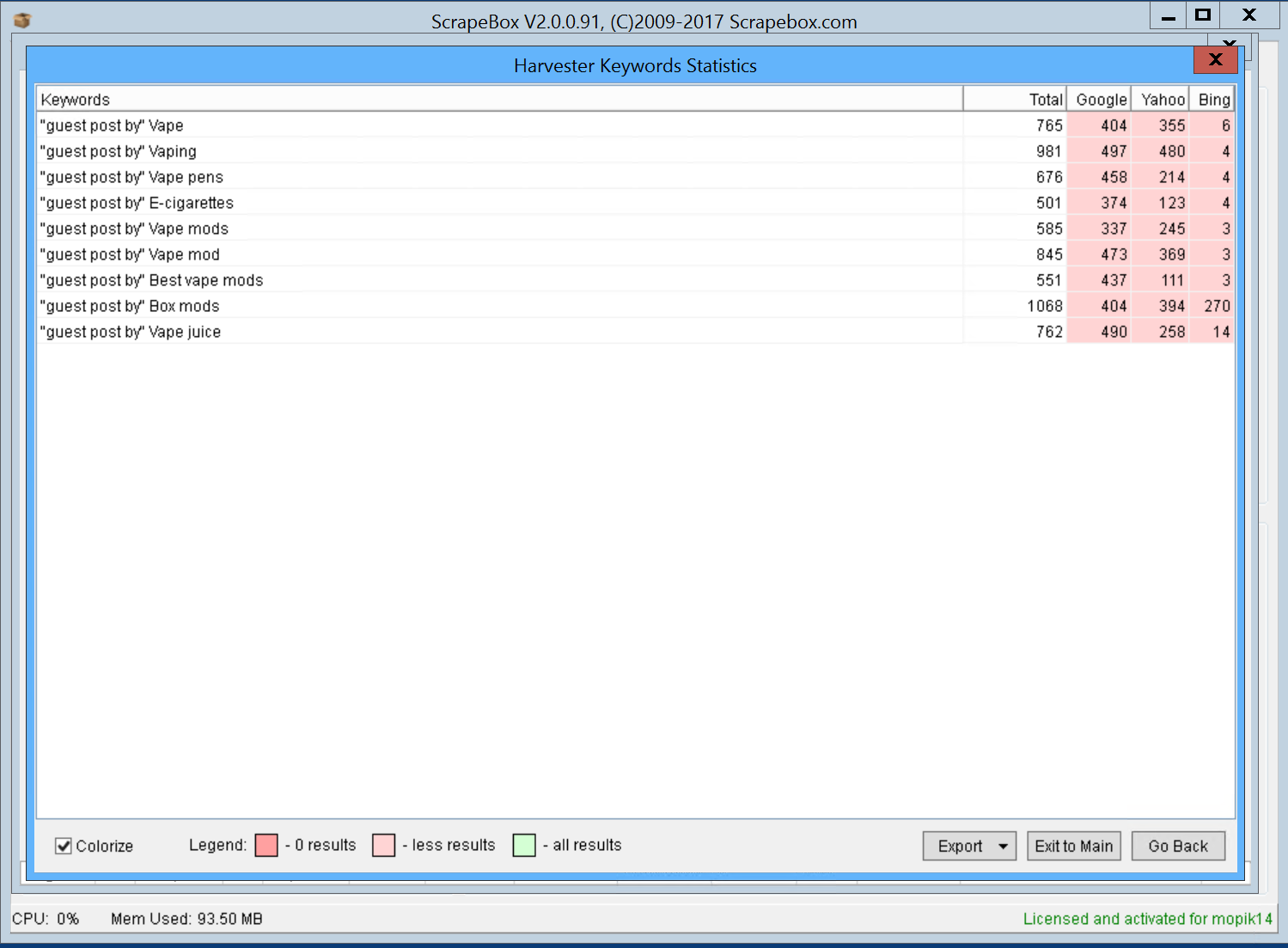

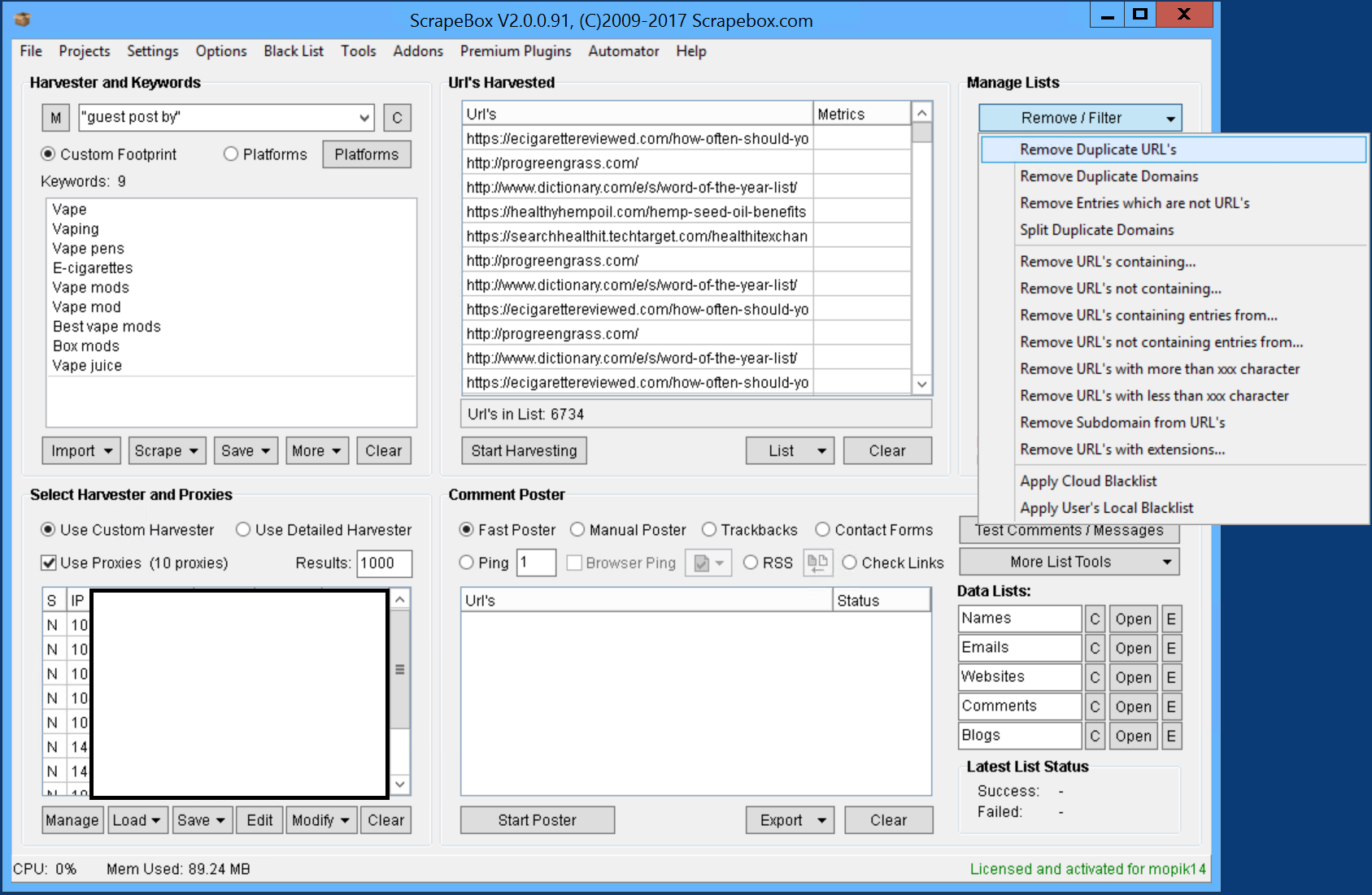

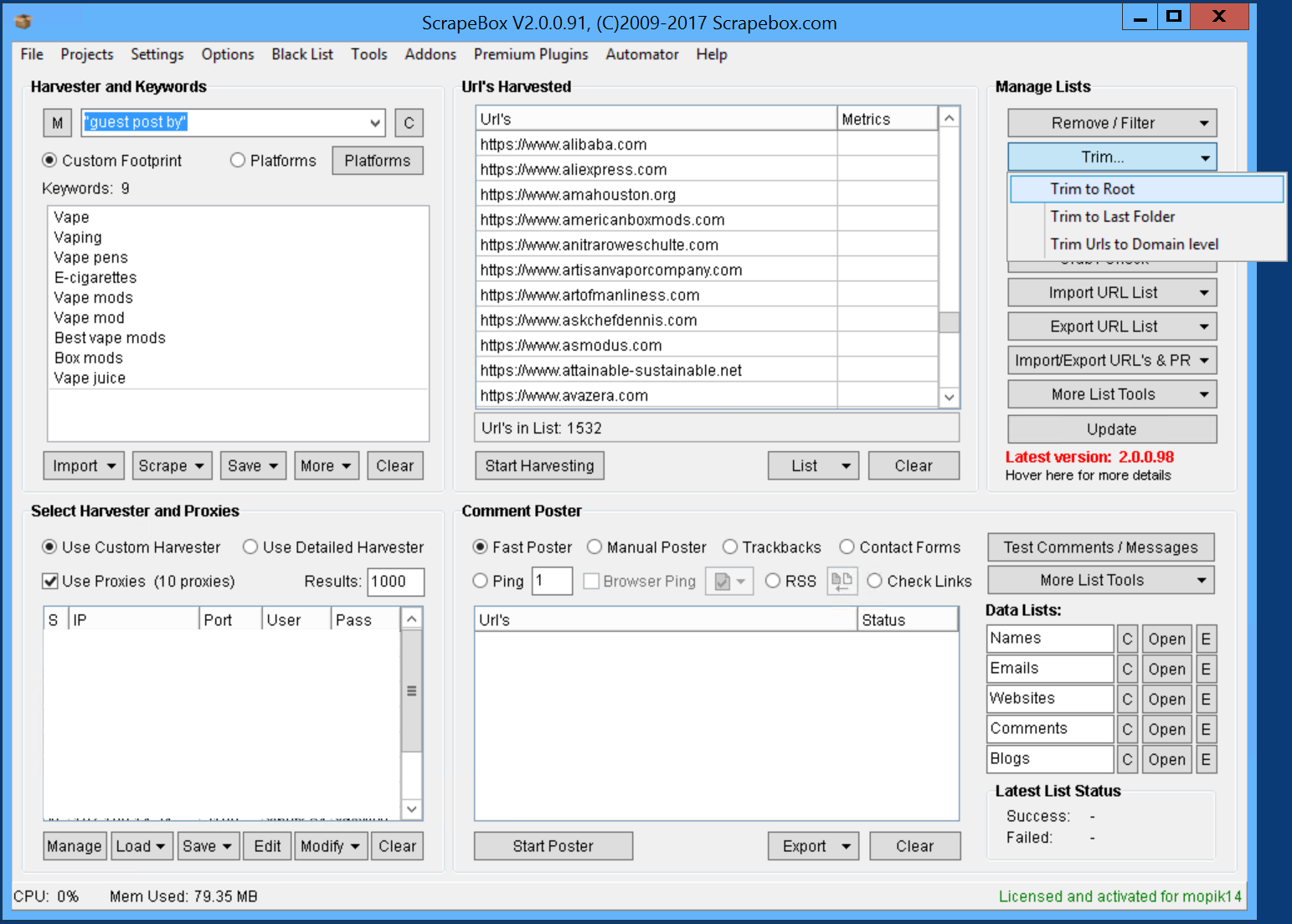

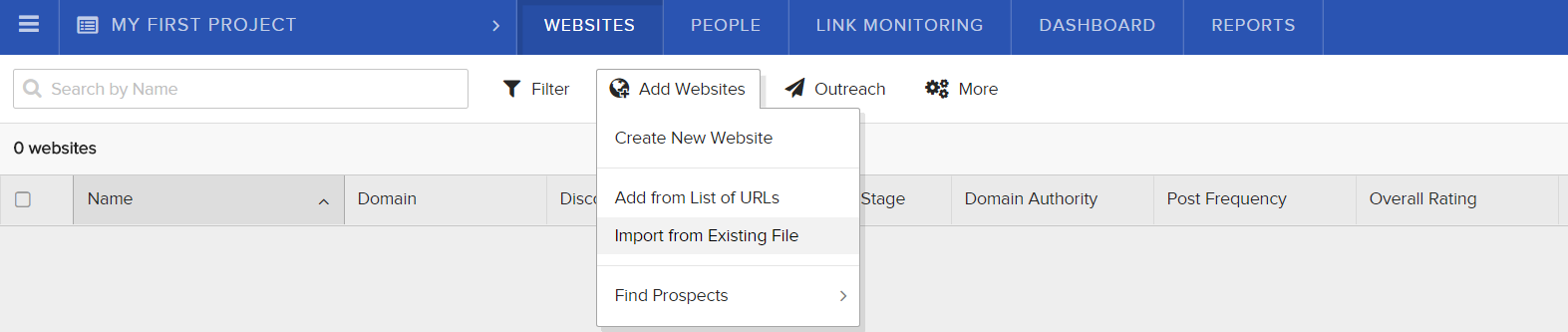

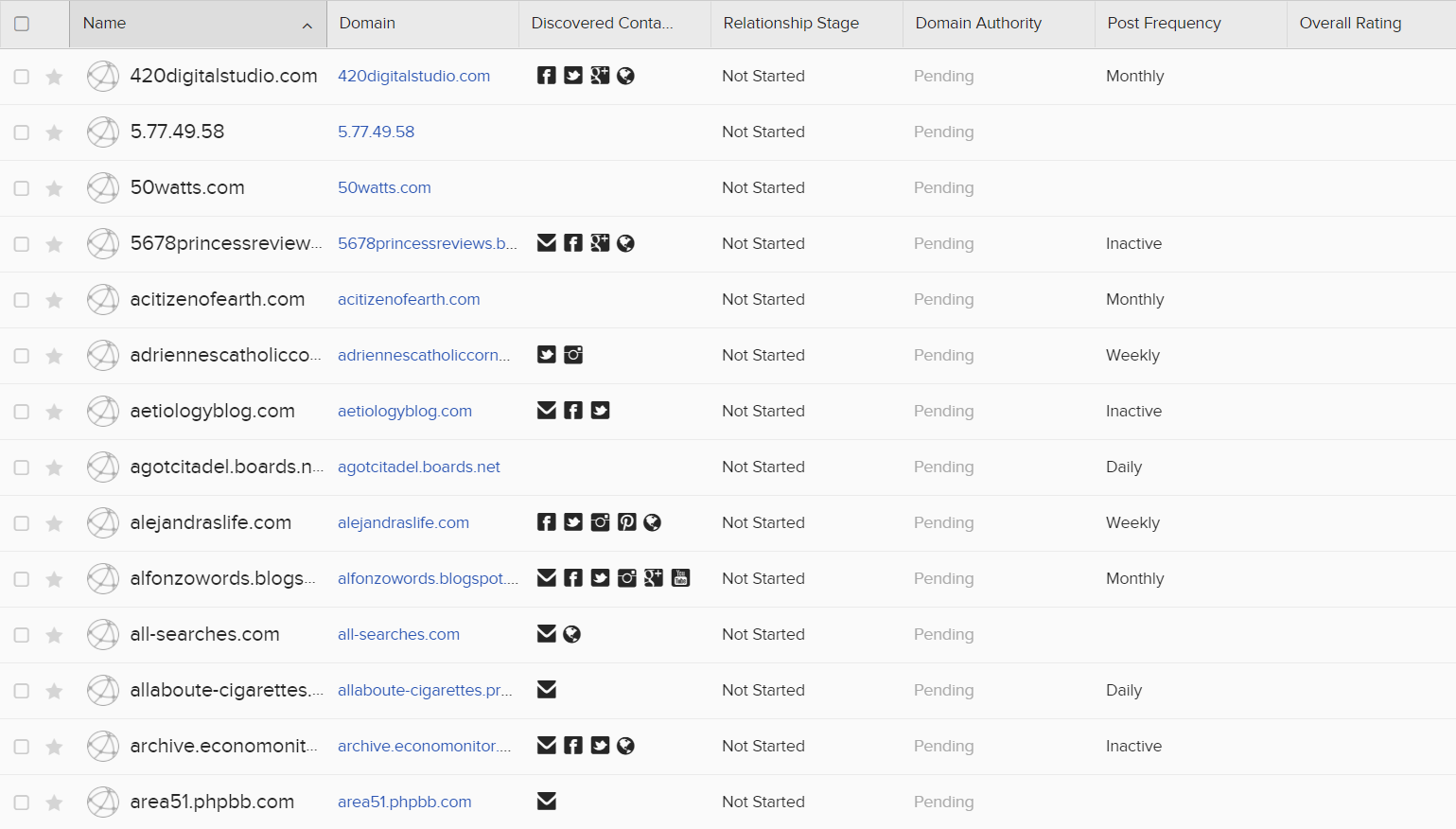

Becoming familiar with Google’s search operators will be massively helpful for the following section. I recommend looking at this guide by Moz as a starting point. You can find more advanced operators in this guide, though it can be a bit of a dry read. Knowing them will massively reduce your time spent searching for guest posting opportunities, as we use operators and ScrapeBox to find most of ours.
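To give you an idea of how operators combine with keywords, here is a minimal sketch that builds a list of search queries in Python. The footprints are commonly used guest post phrases that I’ve assumed for illustration, not a definitive list, and the function names are made up. The output is simply one query per line that you can paste into Google or feed to whatever scraping tool you use.

```python
from itertools import product
from urllib.parse import quote_plus

# Assumed guest post footprints; extend or swap these for your niche.
FOOTPRINTS = [
    '"write for us"',
    '"guest post"',
    'intitle:"write for us"',
    "inurl:guest-post",
]


def build_queries(keywords, footprints=FOOTPRINTS, site_suffix=None):
    """Combine every keyword with every footprint into a search query."""
    queries = []
    for keyword, footprint in product(keywords, footprints):
        query = f'"{keyword}" {footprint}'
        if site_suffix:
            # Restrict results to one TLD, e.g. co.uk for UK sites.
            query += f" site:{site_suffix}"
        queries.append(query)
    return queries


def to_search_url(query):
    """URL-encode a query so it can be opened directly in a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


for query in build_queries(["beds", "sofas"], site_suffix="co.uk"):
    print(query)
```

Two keywords and four footprints give you eight queries, so even a short keyword list multiplies into plenty of opportunities to check.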

Scraping Manually

Some people prefer to scrape for opportunities manually, as they believe it saves them time in the long run: when you scrape with automated tools, you can often end up with thousands of URLs that aren’t always relevant. Unless you have a well trained VA searching for manual opportunities though, you’ll be there for hours and still only get a 20% response rate.